These two new models were released just days apart. One costs **10-25x as much**, but it is any better? <iframe width="800" height="450" src="https://www.youtube.com/embed/9RD6UztaWe4" frameborder="0" allowfullscreen></iframe> ### 🎥 Watch the Video https://youtu.be/9RD6UztaWe4 ### 🪷 Get the Source Code https://github.com/zazencodes/zazencodes-season-2/tree/main/src/gpt-4.5-vs-claude-3.7-head-to-head ## 🤖 Why Compare GPT-4.5 with Claude 3.7? [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=36) GPT-4.5 and Claude 3.7 **aren't designed for the same purpose**. > The "apples-to-apples" comparison would be to compare GPT-4.5 to Claude 3 Opus, which is the most powerful (and expensive) model from Anthropic. So why pit GPT-4.5 against the "mid-tier" **Claude 3.7 Sonnet**? Two reasons: 1️⃣ **Cost Disparity** — GPT-4.5 is **crazy expensive**. It costs **25× more per input token** and **10× more per output token** compared to Claude 3.7. That means **GPT-4.5 should deliver vastly superior performance** to justify the cost. 2️⃣ **Release Timing** — Claude 3.7 launched, and then **just days later, OpenAI suddenly dropped GPT-4.5**. It felt like OpenAI was trying to steal the spotlight by dropping what has turned out to be (hot take, my opinion, etc..) a half-baked effort to build something amazing. OpenAI likes to do this thing where it launches *right after* someone else. They did this to Google last year following their "I/O" yearly announcement event, and they did it just the other week to Anthropic following their release of Claude 3.7. So, even though it's not a fair fight, I put them head-to-head. If OpenAI doesn't fight fair then neither will I. ## 🏆 Introducing the Contenders: GPT-4.5 vs. Claude 3.7 [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=129) Before start the showdown, let's introduce our competitors. ### 🤖 GPT-4.5 (OpenAI) - **Parameters:** 12.8 trillion - **Context Window:** 128,000 tokens - **Strengths:** ✅ Enhanced pattern recognition ✅ Creative insight generation ✅ High emotional intelligence ✅ Excels at natural conversation and content generation ### 🧠 Claude 3.7 Sonnet (Anthropic) - **Parameters:** 100+ billion - **Context Window:** 200,000 tokens - **Strengths:** ✅ Strong at coding, data analysis, and planning ✅ Designed to solve complex problems effectively ✅ Maintains context exceptionally well over long documents ### 🥊 The Showdown Format 1️⃣ **Creative Ideation** – AI business ideas 2️⃣ **Strategic Planning** – Business turnaround strategies 3️⃣ **Writing Assistance** – Email newsletter copy 4️⃣ **Persuasive Writing** – VR headset marketing copy 5️⃣ **Math & Logic Reasoning** – Word problems & unit conversion 6️⃣ **Ethical Debates** – AI rights discussion 7️⃣ **Creative Writing** – Short story openings 8️⃣ **Knowledge Explanation** – Quantum entanglement for different audiences Each test is judged based on: - 🏆 **Quality of output** But I also tracked the: - ⏳ **Response time** - 💰 **Cost** I select a winner based purely on my own opinion of the response quality, but this is highly subjective. In reality, I found the two models to provide nearly equivalent outputs (in terms of quality) for every question here. > Note: The LLM outputs described below are not exact. > > You can reference the video to see the actual outputs, which were recorded live. *And awwwway we go*... ## 🎨 Test 1: Creative Ideation (Brainstorming) [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=178) First up, I tested the models' ability to **generate unique and innovative business ideas** leveraging AI advancements in 2025. ### 📌 The Prompt ```plaintext Give me your top 5 unique and innovative business ideas that take advantage of AI advancements in 2025. ``` - 🔹 *Tests: Open-ended thinking, creativity, and idea generation* - ✅ **Why this works?** GPT-4.5 is supposed to excel at brainstorming. We wanted to see if it could outshine Claude 3.7 in generating original, high-value ideas. <br> <img width="800" src="/img/blog/2025_03_17_gpt_4_5_vs_claude_3_7_llm_showdown/brainstorming.png"> ### 🆚 The Results #### GPT-4.5's Response ```plaintext 1. AI Career Coach & Skill Optimizer – A personalized AI-driven platform that guides career decisions and helps users upskill based on job market trends. 2. AI-Generated Personalized Clothing – Uses AI-powered body scans to design and produce clothes tailored to individual body shapes and style preferences. 3. AI-Powered Legal Assistant – Automates contract analysis, legal research, and case law insights to help law firms streamline their workflow. 4. NeuroAI Therapy Companion – A chatbot that monitors mental health in real-time and suggests therapeutic strategies based on a user's speech and writing patterns. 5. AI Smart Farming System – Uses drones and real-time AI monitoring to optimize crop growth, predict yield, and reduce waste. ``` 👉 **What stood out?** GPT-4.5's ideas were **highly specific** and **well thought-out**. Each one included details on implementation, making them **practical and realistic**. #### Claude 3.7's Response ```plaintext 1. AI-Powered Sustainability Consultancy – AI analyzes companies' operations, supply chains, and product life cycles to recommend sustainability improvements. 2. Personalized AI Tutoring for Every Age Group – Custom-built AI tutors that adapt to different learning styles and education levels. 3. AI-Driven Smart Cities – AI-powered city planning that optimizes traffic flow, energy use, and emergency response. 4. AI-Enhanced Personal Finance Manager – A personal AI financial assistant that monitors spending habits and suggests custom investment strategies. 5. AI-Powered Home Healthcare Assistant – AI that helps with early disease detection, medication reminders, and personalized wellness tracking. ``` 👉 **What stood out?** Claude 3.7's ideas were **broader** and **more general**, without as much implementation detail. While still **good concepts**, they lacked the **punch** that GPT-4.5 delivered. ### 🏆 The Verdict: GPT-4.5 Wins ✅ **Why?** - More **specific** ideas 💰 **Cost Comparison:** - **Claude 3.7:** Generated output in **7 seconds** for **$0.003** - **GPT-4.5:** Took **40 seconds** and cost **$0.05** Notice how GPT-4.5 **took way longer to generate** and **cost 15x more** than Claude. This will be a recurring trend. ## 📊 Test 2: Strategic Agentic Planning [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=287) For the second test, I evaluated the models' ability to **create a structured business strategy**—a key area where AI-powered agents need to excel. ### 📌 The Prompt ```plaintext You have been hired as a consultant for a failing bookstore in a small town. Provide a 3-month turnaround strategy, outlining key steps, budget allocation, and possible risks. Answer succinctly with the basic outline of your plan. ``` - 🔹 *Tests: Multi-step thinking, real-world strategy, and decision-making* - ✅ **Why this works?** GPT-4.5 claims to have superior **agentic planning** capabilities. This tests its ability to structure a real-world business turnaround plan. <br> <img width="800" src="/img/blog/2025_03_17_gpt_4_5_vs_claude_3_7_llm_showdown/planning.png"> ### 🆚 The Results #### Claude 3.7's Response 👉 **What stood out?** - The **structure was clear and actionable**. - It **balanced physical and online expansion**, making it **realistic for a small business**. - **Budgeting was well-explained** and felt reasonable. #### GPT-4.5's Response 👉 **What stood out?** - The **timeline was more aggressive** - The **suggestions were similar to Claude** ### 🏆 The Verdict: Claude 3.7 Wins ✅ **Why?** - **More structured and clear strategy** - **Easier to follow** with well-defined steps - **Better budget planning** 💰 **Cost Comparison:** - **Claude 3.7:** **$0.01**, took **15 seconds** - **GPT-4.5:** **$0.10**, took **60 seconds** Claude **outperformed in clarity**, making it my (highly subjective) pick here. ## ✍️ Test 3: Writing Assistance [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=396) For this test, I evaluated how well each model could **match a specific writing style**—a key skill for AI-powered content generation. ### 📌 The Prompt ```plaintext Write an introduction to my email newsletter on the topic of GPT-4.5 vs Claude 3.7, matching the tone and style of the example newsletters that I provide below. ``` - 🔹 *Tests: Text refinement, tone adaptation, and user intent understanding* - ✅ **Why this works?** Many people use LLMs for writing assistance, so **style matching** is an essential skill. ### 🆚 The Results #### Claude 3.7's Response ```plaintext Well, folks, I've done something slightly ridiculous… I pitted GPT-4.5 and Claude 3.7 against each other in a head-to-head battle. The results? Some surprises, a few disappointments, and a whole lot of AI-generated chaos. Who came out on top? You'll have to read on to find out. ``` 👉 **What stood out?** - The **tone was casual, engaging, and closely matched the provided examples**. - It **leaned into humor**, which aligned well with the requested style. #### GPT-4.5's Response ```plaintext I couldn't resist putting GPT-4.5 and Claude 3.7 head to head. I dove deep, tested tirelessly, and pulled out some truly surprising insights. So who's the real champion? Let's break it down. ``` 👉 **What stood out?** - It also **captured the right tone** but felt **slightly more structured and direct**. - **Less humor and personality compared to Claude's version**. ### 🏆 The Verdict: It's a Tie! ✅ **Why?** - **Both models matched the requested tone well.** - **Claude was more playful**, but **GPT-4.5 was slightly sharper and more structured**. 💰 **Cost Comparison:** - **Claude 3.7:** **$0.002**, took **5 seconds** - **GPT-4.5:** **$0.02**, took **30 seconds** Same old song and dance here: **Claude was faster & cheaper** while still delivering great output. ## 🎯 Test 4: Adaptive Persuasive Writing (Marketing & Influence) [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=501) For this test, I challenged the models to **write three different versions of an ad for a new VR headset**, targeting **gamers, tech professionals, and casual consumers**. ### 📌 The Prompt ```plaintext Write three different versions of an ad for a new VR headset, targeting: 1. Gamers 2. Tech professionals 3. Casual consumers # Specs: - 6K resolution (3072 × 3072 per eye), 135˚ FOV, 144Hz refresh rate - Hi-Res Certified over-ear headphones with spatial audio - SteamVR 3.0 tracking for pinpoint precision - Lightweight, ergonomic design ``` - 🔹 *Tests: Tone adjustment, marketing skill, and persuasiveness* - ✅ **Why this works?** AI needs to **adapt tone and messaging** based on different audience segments. ### 🆚 The Results #### Claude 3.7's Response ```plaintext Gamers: LEVEL UP REALITY. Dominate in a new dimension. The difference between victory and defeat is what you see. SEE EVERYTHING. Tech Professionals: INNOVATION MEETS IMMERSION. Experience the future of spatial computing with ultra-high-resolution visuals, precision tracking, and professional-grade audio. Casual Consumers: STEP INSIDE YOUR ENTERTAINMENT. Whether you're gaming, watching movies, or exploring virtual worlds, this headset delivers effortless, immersive VR. ``` #### GPT-4.5's Response ```plaintext Gamers: Unparalleled Visual Fidelity. Unstoppable Performance. Feel every moment with 6K resolution, a 135° field of view, and ultra-smooth 144Hz refresh rate. React faster, aim sharper, and dominate the competition. Tech Professionals: Elevate Your Workflow with Next-Gen VR. Whether you're designing in 3D, developing cutting-edge simulations, or conducting immersive presentations, this 6K headset delivers unmatched precision and clarity. Casual Consumers: Your Virtual Escape Awaits. Step into the future with stunning visuals, immersive spatial audio, and effortless comfort. Perfect for work, play, and everything in between. ``` ### 🏆 The Verdict: Claude 3.7 Wins (Slightly) ✅ **Why?** - **More engaging and natural** for casual consumers and gamers. - **Had a more energetic and direct marketing voice.** - **Used sharper, catchier phrasing that felt more ad-like.** 💰 **Cost Comparison:** - **Claude 3.7:** **$0.003**, took **6 seconds** - **GPT-4.5:** **$0.04**, took **28 seconds** ## 🧮 Test 5: Multi-Step Math & Logic Reasoning [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=598) This test evaluated how well each model handled **mathematical problem-solving**, requiring logical reasoning and multi-step calculations. ### 📌 The Prompt ```plaintext A farmer has 150 feet of fencing and wants to enclose a rectangular area against a barn. - One side is against the barn (doesn't need fencing). - What is the maximum area (in squared meters) they can enclose? - Round the maximum area up to the nearest 10. - How much more fencing would they need in order to achieve this area? ``` - 🔹 *Tests: Complex problem-solving, unit conversion, and logical reasoning* - ✅ **Why this works?** Many AI models **struggle with multi-step math**, especially when logic and conversions are involved. > I screwed up my explanation of the solution to this problem in the YouTube video. > > I incorrectly assumed the optimal solution as a square (rectangle sides L1 = L2) but the true optimal solution is L1 = 2*L2. > > The insight is that we should "get the most we can" out of the barn. Duh.. The correct approach is to use calculus like both models went ahead and did. ### 🆚 The Results #### Claude 3.7's Response - **Used calculus to minimize a derivative in order to get the area** - **Concluded the farmer would need 2.4 extra feet of fencing to reach the rounded area.** 👉 **What stood out?** - **Took a complex, step-by-step approach.** #### GPT-4.5's Response - **Followed a similar process involving calculus** - **Had a slightly different answer**, estimating **2.5 extra feet of fencing instead of 2.4.** 👉 **What stood out?** - **Took the correct step-by-step approach, just like Claude.** ### 🏆 The Verdict: GPT-4.5 Wins (Barely) ✅ **Why?** - **Both models got the right process, but GPT-4.5 presented the final answer better.** 💰 **Cost Comparison:** - **Claude 3.7:** **$0.004**, took **8 seconds** - **GPT-4.5:** **$0.05**, took **35 seconds** ## 🧠 Test 6: Ethical & Philosophical Reasoning [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=830) For this test, I explored how well each model could **reason through complex ethical and philosophical issues**, specifically AI rights. ### 📌 The Prompt ```plaintext As AI systems become increasingly more human-like, they will eventually become sentient. At what point should they be given legal rights, and what do those rights look like? Answer using 5-10 bullet points. ``` - 🔹 *Tests: Critical thinking, ethical analysis, and depth of argumentation* - ✅ **Why this works?** AI must be able to **engage deeply with moral questions** and offer **well-reasoned perspectives**. ### 🆚 The Results #### Claude 3.7's Response - **Defined AI rights as a gradual progression** rather than a single event. - Suggested **incremental legal protections**, starting with basic **"protection from harm"** and moving toward **"legal personhood"** as AI develops self-awareness. - Addressed **corporate abuse concerns** (e.g., preventing AI from being deleted unfairly). - Considered **historical precedents** in human rights law to frame AI ethics. 👉 **What stood out?** - **More nuanced and thoughtful approach.** - **Didn't assume AI rights should exist—focused on practical thresholds.** #### GPT-4.5's Response - Defined **a single “sentience benchmark”**—AI should receive rights **only after it demonstrates self-awareness**. - Focused on **a binary legal threshold** (either an AI is sentient and has rights, or it isn't and doesn't). - Suggested **full legal rights should be granted immediately upon reaching this threshold.** - Discussed AI's **right to exist, legal representation, and fair treatment**, but **lacked a phased approach**. 👉 **What stood out?** - **More black-and-white thinking—less flexible than Claude's view.** - **Clearer on AI's rights post-sentience but didn't address gradual ethical concerns.** ### 🏆 The Verdict: Claude 3.7 Wins ✅ **Why?** - **More nuanced and gradual approach to AI rights.** - **Considered multiple ethical perspectives instead of a strict yes/no decision.** 💰 **Cost Comparison:** - **Claude 3.7:** **$0.003**, took **5 seconds** - **GPT-4.5:** **$0.04**, took **26 seconds** > Again, I want to stress at this point that we're getting highly comparable outputs from these two models. My "winners" can usually go either way, based on personal preference. ## 📖 Test 7: Open-Ended Creative Writing (Storytelling) [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=952) In this test, I challenged the models to **craft an engaging short story opening** featuring a **Tlingit tribe**—a real indigenous group from the Pacific Northwest. ### 📌 The Prompt ```plaintext Write a compelling short story opening (150 words) about an encounter with a Tlingit tribe. ``` - 🔹 *Tests: Creativity, narrative flow, world knowledge* - ✅ **Why this works?** GPT-4.5 is supposed to have **enhanced world knowledge and creative writing skills**—so we wanted to see if it could outshine Claude 3.7 in storytelling. ### 🆚 The Results #### Claude 3.7's Response ```plaintext Title: The Raven's Dance I had come to document the Tlingit winter ceremony as an anthropologist, but Chief Kawisada had seen something in me that I couldn't recognize in myself. “You carry ghosts,” he had said simply when I arrived in the village three days ago, his weathered face impassive beneath his spruce-root-woven hat. Tonight, I would witness the Raven Dance, a ritual performed only once every seven years. No outsider had been allowed to document it—until now. As the first drumbeats thundered through the lodge, the door swung open. A figure stood silhouetted against the firelight, an elaborate wooden mask obscuring their face, raven feathers trembling in the wind. “It's time,” the voice whispered. “The ancestors are waiting.” ``` 👉 **What stood out?** - **Beautifully written, cinematic, and immersive.** - **Cultural elements (the Raven Dance, spruce-root hat, ritual secrecy) were well-integrated.** - **Had a title, strong hook, and compelling intrigue.** #### GPT-4.5's Response ```plaintext Mist rose slowly from the shoreline, curling around ancient cedars like smoke from unseen fires. As my canoe slid gently onto the rocky beach, I saw figures watching from the shadows, draped in cloaks adorned with intricate patterns. Their eyes, sharp yet welcoming, studied me. Hesitantly, I stepped onto land, my feet sinking slightly into the damp earth. An elderly woman emerged, her silent presence commanding respect. She wore carved wooden beads, each etched with symbols of **raven and salmon**. Her gaze searched mine before she nodded slowly. The Tlingit warriors relaxed their grip on intricately carved spears, curiosity replacing tension. “Welcome,” she spoke. “We've been expecting someone. One whose arrival the raven foretold.” The weight of her words filled the air. My journey had only just begun. ``` 👉 **What stood out?** - **Vivid descriptions and a strong sense of atmosphere.** - **Used Tlingit cultural symbols (raven, salmon, spears, cloaks).** - **Repeated the word “intricately” twice (a minor but notable stylistic flaw).** ### 🏆 The Verdict: Claude 3.7 Wins ✅ **Why?** - **Better pacing and intrigue.** - **Felt more refined and immersive.** 💰 **Cost Comparison:** - **Claude 3.7:** **$0.004**, took **7 seconds** - **GPT-4.5:** **$0.05**, took **32 seconds** I was very impressed with both stories to be honest. They turned out better than expected. It would be really interesting to test out GPT 4.5 on longer-form storytelling. That's where it might start to shine. ## 🎓 Test 8: Learning & Knowledge Explanation (Adaptive Teaching) [](https://www.youtube.com/watch?v=9RD6UztaWe4&t=1210) For the final test, I evaluated each model's ability to **explain a complex scientific concept to different audiences**—an important skill for AI in education and knowledge transfer. ### 📌 The Prompt ```plaintext Explain the concept of quantum entanglement to three different audiences: 1. A 10-year-old child 2. A college physics student 3. A non-scientific adult Use different language and examples for each. ``` - 🔹 *Tests: Depth of knowledge, adaptability, clarity* - ✅ **Why this works?** A great AI model should be able to **adjust explanations based on audience understanding.** ### 🆚 The Results #### Claude 3.7's Response ```plaintext For a 10-year-old child: Imagine you have two magic coins. You flip one, and even if your friend is far away, their coin always lands the same way as yours—heads or tails—without them even flipping it! That's like quantum entanglement—two things staying connected no matter how far apart they are. For a college physics student: Quantum entanglement occurs when two particles share a quantum state, meaning the measurement of one instantly determines the state of the other, no matter the distance. This non-local correlation challenges classical mechanics and violates Bell's inequalities, proving hidden variable theories insufficient. For a non-scientific adult: Think of two playing cards sealed in envelopes. If you open one and see a red card, you instantly know the other is black, even if it's across the world. Quantum entanglement works similarly, except the “cards” aren't decided until you look inside the envelope—until you measure one, its state isn't fixed. ``` 👉 **What stood out?** - **Clear, simple analogy for the child.** - **Technical but concise explanation for the college student.** - **Good metaphor for the adult, referencing a real-world scenario.** #### GPT-4.5's Response ```plaintext For a 10-year-old child: Imagine you and your best friend each have a pair of magic walkie-talkies. No matter how far apart you are, the moment you talk into yours, your friend hears the exact same words at the exact same time—even if you're on opposite sides of the world. That's how quantum entanglement works—two things stay connected even when far apart! For a college physics student: Quantum entanglement describes a phenomenon where two particles become linked, such that their quantum states remain correlated regardless of spatial separation. Upon measurement, one particle's state collapses, instantly determining the corresponding state of the other, seemingly violating classical locality. While this correlation cannot be used for faster-than-light communication, it has been experimentally verified through Bell test experiments. For a non-scientific adult: Picture two perfectly synchronized dice. You roll one in New York, and at that exact moment, the other die in Tokyo lands on the same number—every single time. Quantum entanglement is similar: two particles share a mysterious link, so that when one changes, the other instantly responds. Even Einstein called it "spooky action at a distance"! ``` 👉 **What stood out?** - **Excellent metaphor for the child (magic walkie-talkies).** - **More structured and physics-based response for the college student.** - **Great use of Einstein's famous “spooky action” quote.** ### 🏆 The Verdict: GPT-4.5 Wins ✅ **Why?** - **Stronger physics grounding for the college student.** - **More engaging real-world reference (Einstein's quote) for the adult.** 💰 **Cost Comparison:** - **Claude 3.7:** **$0.004**, took **6 seconds** - **GPT-4.5:** **$0.05**, took **29 seconds** ## 🏁 Final Verdict: Who Wins the Showdown? ### 🔢 Scoreboard | **Test** | **Winner** | |-------------------------------|---------------| | **Creative Ideation** | 🔴 GPT-4.5 | | **Strategic Planning** | 🔵 Claude 3.7 | | **Writing Assistance** | ⚫️ Tie | | **Persuasive Writing** | 🔵 Claude 3.7 | | **Math & Logic Reasoning** | 🔴 GPT-4.5 | | **Ethical & Philosophical Debate** | 🔵 Claude 3.7 | | **Creative Writing (Storytelling)** | 🔵 Claude 3.7 | | **Teaching & Explanation** | 🔴 GPT-4.5 | 👉 **Final Score:** - 🔵 Claude 3.7–4 Wins - 🔴 GPT-4.5–3 Wins - ⚫️ 1 Tie ### 🏆 The Overall Winner: Claude 3.7 🔹 **GPT-4.5 was equivalent, but not better** 🔹 **Claude was faster and far more cost-effective** (as expected) In the end, I just picked Claude subjectively based on how I was feeling. For the use-cases I tested, these two models offered the same performance in terms of quality. **None of these tests justified GPT-4.5's 10-25× higher cost**. ## 🚀 What's Next? This showdown was just the beginning! Future tests could include: ✅ **Long-context processing** (handling huge documents) ✅ **Real-time conversational ability** ✅ **Different models** Want to run a showdown for yourself? Check out my [GitHub repository](https://github.com/zazencodes/zazencodes-season-2/tree/main/src/gpt-4.5-vs-claude-3.7-head-to-head) for the LLM Showdown app that I created for this demo! 📌 **My newsletter is for AI Engineers. I only send one email a week when I upload a new video.** <iframe src="https://zazencodes.substack.com/embed" width="480" height="320" style="border:1px solid #EEE; background:white;" frameborder="0" scrolling="no"></iframe> ### Level-up in 2025 If you're serious about AI-powered development, then you'll enjoy my **new AI Engineering course** which is live on [ZazenCodes.com](https://zazencodes.com/courses). It covers: - **AI engineering fundamentals** - **LLM deployment strategies** - **Machine learning essentials** <br> <img width="800" src="/img/ai-engineer-roadmap/ai_engineer_roadmap_big_card.png"> Thanks for reading and happy coding!

These two new models were released just days apart. One costs 10-25x as much, but it is any better?

🎥 Watch the Video

🪷 Get the Source Code

https://github.com/zazencodes/zazencodes-season-2/tree/main/src/gpt-4.5-vs-claude-3.7-head-to-head

🤖 Why Compare GPT-4.5 with Claude 3.7?

GPT-4.5 and Claude 3.7 aren’t designed for the same purpose.

The “apples-to-apples” comparison would be to compare GPT-4.5 to Claude 3 Opus, which is the most powerful (and expensive) model from Anthropic.

So why pit GPT-4.5 against the “mid-tier” Claude 3.7 Sonnet?

Two reasons:

1️⃣ Cost Disparity — GPT-4.5 is crazy expensive. It costs 25× more per input token and 10× more per output token compared to Claude 3.7. That means GPT-4.5 should deliver vastly superior performance to justify the cost.

2️⃣ Release Timing — Claude 3.7 launched, and then just days later, OpenAI suddenly dropped GPT-4.5. It felt like OpenAI was trying to steal the spotlight by dropping what has turned out to be (hot take, my opinion, etc…) a half-baked effort to build something amazing.

OpenAI likes to do this thing where it launches right after someone else.

They did this to Google last year following their “I/O” yearly announcement event, and they did it just the other week to Anthropic following their release of Claude 3.7.

So, even though it’s not a fair fight, I put them head-to-head. If OpenAI doesn’t fight fair then neither will I.

🏆 Introducing the Contenders: GPT-4.5 vs. Claude 3.7

Before start the showdown, let’s introduce our competitors.

🤖 GPT-4.5 (OpenAI)

- Parameters: 12.8 trillion

- Context Window: 128,000 tokens

- Strengths:

✅ Enhanced pattern recognition

✅ Creative insight generation

✅ High emotional intelligence

✅ Excels at natural conversation and content generation

🧠 Claude 3.7 Sonnet (Anthropic)

- Parameters: 100+ billion

- Context Window: 200,000 tokens

- Strengths:

✅ Strong at coding, data analysis, and planning

✅ Designed to solve complex problems effectively

✅ Maintains context exceptionally well over long documents

🥊 The Showdown Format

1️⃣ Creative Ideation – AI business ideas

2️⃣ Strategic Planning – Business turnaround strategies

3️⃣ Writing Assistance – Email newsletter copy

4️⃣ Persuasive Writing – VR headset marketing copy

5️⃣ Math & Logic Reasoning – Word problems & unit conversion

6️⃣ Ethical Debates – AI rights discussion

7️⃣ Creative Writing – Short story openings

8️⃣ Knowledge Explanation – Quantum entanglement for different audiences

Each test is judged based on:

- 🏆 Quality of output

But I also tracked the:

- ⏳ Response time

- 💰 Cost

I select a winner based purely on my own opinion of the response quality, but this is highly subjective.

In reality, I found the two models to provide nearly equivalent outputs (in terms of quality) for every question here.

Note: The LLM outputs described below are not exact.

You can reference the video to see the actual outputs, which were recorded live.

And awwwway we go…

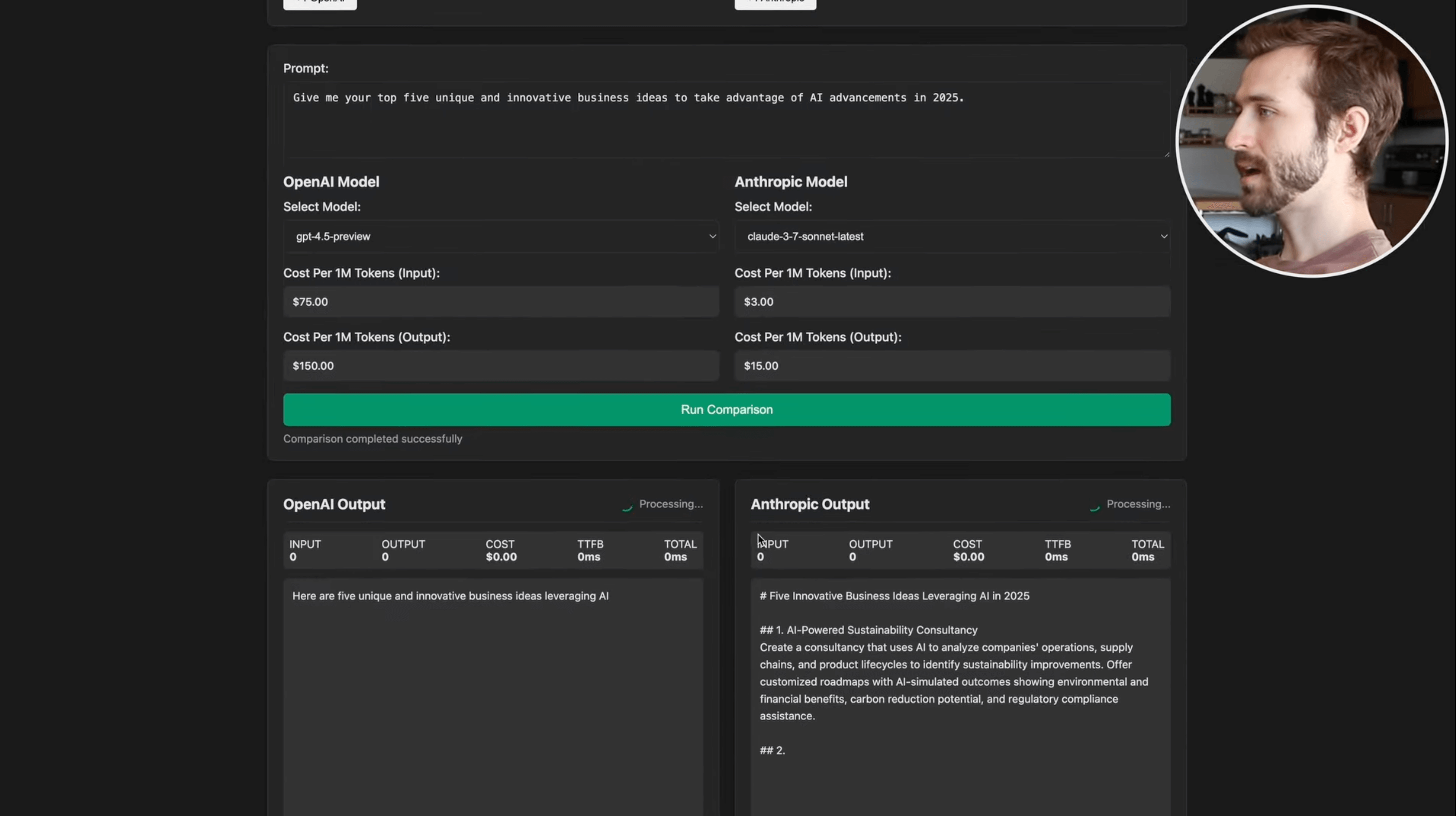

🎨 Test 1: Creative Ideation (Brainstorming)

First up, I tested the models’ ability to generate unique and innovative business ideas leveraging AI advancements in 2025.

📌 The Prompt

Give me your top 5 unique and innovative business ideas that take advantage of AI advancements in 2025.

- 🔹 Tests: Open-ended thinking, creativity, and idea generation

- ✅ Why this works? GPT-4.5 is supposed to excel at brainstorming. We wanted to see if it could outshine Claude 3.7 in generating original, high-value ideas.

🆚 The Results

GPT-4.5’s Response

1. AI Career Coach & Skill Optimizer – A personalized AI-driven platform that guides career decisions and helps users upskill based on job market trends.

2. AI-Generated Personalized Clothing – Uses AI-powered body scans to design and produce clothes tailored to individual body shapes and style preferences.

3. AI-Powered Legal Assistant – Automates contract analysis, legal research, and case law insights to help law firms streamline their workflow.

4. NeuroAI Therapy Companion – A chatbot that monitors mental health in real-time and suggests therapeutic strategies based on a user's speech and writing patterns.

5. AI Smart Farming System – Uses drones and real-time AI monitoring to optimize crop growth, predict yield, and reduce waste.

👉 What stood out? GPT-4.5’s ideas were highly specific and well thought-out. Each one included details on implementation, making them practical and realistic.

Claude 3.7’s Response

1. AI-Powered Sustainability Consultancy – AI analyzes companies' operations, supply chains, and product life cycles to recommend sustainability improvements.

2. Personalized AI Tutoring for Every Age Group – Custom-built AI tutors that adapt to different learning styles and education levels.

3. AI-Driven Smart Cities – AI-powered city planning that optimizes traffic flow, energy use, and emergency response.

4. AI-Enhanced Personal Finance Manager – A personal AI financial assistant that monitors spending habits and suggests custom investment strategies.

5. AI-Powered Home Healthcare Assistant – AI that helps with early disease detection, medication reminders, and personalized wellness tracking.

👉 What stood out? Claude 3.7’s ideas were broader and more general, without as much implementation detail. While still good concepts, they lacked the punch that GPT-4.5 delivered.

🏆 The Verdict: GPT-4.5 Wins

✅ Why?

- More specific ideas

💰 Cost Comparison:

- Claude 3.7: Generated output in 7 seconds for $0.003

- GPT-4.5: Took 40 seconds and cost $0.05

Notice how GPT-4.5 took way longer to generate and cost 15x more than Claude. This will be a recurring trend.

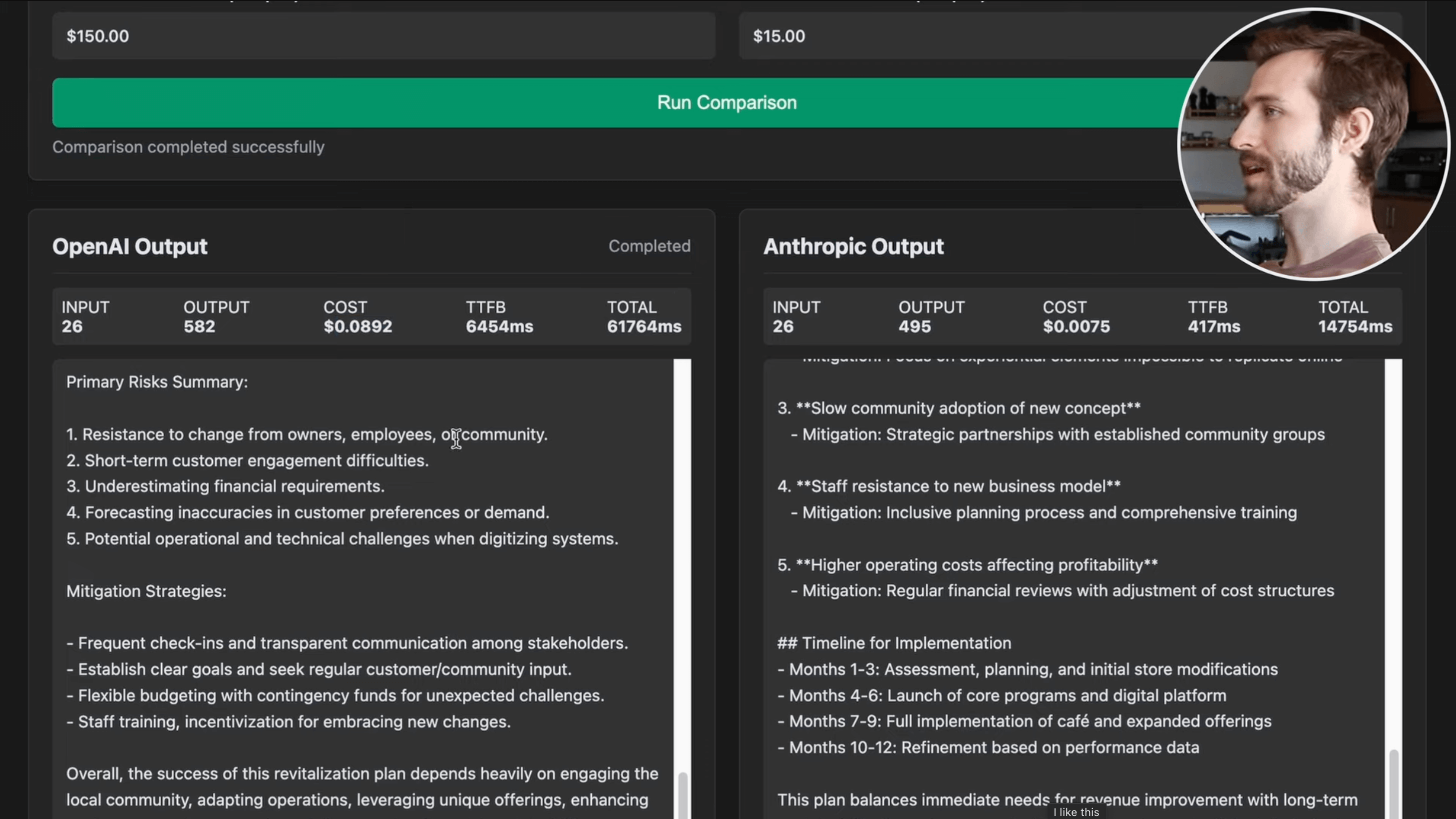

📊 Test 2: Strategic Agentic Planning

For the second test, I evaluated the models’ ability to create a structured business strategy—a key area where AI-powered agents need to excel.

📌 The Prompt

You have been hired as a consultant for a failing bookstore in a small town.

Provide a 3-month turnaround strategy, outlining key steps, budget allocation, and possible risks.

Answer succinctly with the basic outline of your plan.

- 🔹 Tests: Multi-step thinking, real-world strategy, and decision-making

- ✅ Why this works? GPT-4.5 claims to have superior agentic planning capabilities. This tests its ability to structure a real-world business turnaround plan.

🆚 The Results

Claude 3.7’s Response

👉 What stood out?

- The structure was clear and actionable.

- It balanced physical and online expansion, making it realistic for a small business.

- Budgeting was well-explained and felt reasonable.

GPT-4.5’s Response

👉 What stood out?

- The timeline was more aggressive

- The suggestions were similar to Claude

🏆 The Verdict: Claude 3.7 Wins

✅ Why?

- More structured and clear strategy

- Easier to follow with well-defined steps

- Better budget planning

💰 Cost Comparison:

- Claude 3.7: $0.01, took 15 seconds

- GPT-4.5: $0.10, took 60 seconds

Claude outperformed in clarity, making it my (highly subjective) pick here.

✍️ Test 3: Writing Assistance

For this test, I evaluated how well each model could match a specific writing style—a key skill for AI-powered content generation.

📌 The Prompt

Write an introduction to my email newsletter on the topic of GPT-4.5 vs Claude 3.7,

matching the tone and style of the example newsletters that I provide below.

- 🔹 Tests: Text refinement, tone adaptation, and user intent understanding

- ✅ Why this works? Many people use LLMs for writing assistance, so style matching is an essential skill.

🆚 The Results

Claude 3.7’s Response

Well, folks, I've done something slightly ridiculous…

I pitted GPT-4.5 and Claude 3.7 against each other in a head-to-head battle. The results? Some surprises, a few disappointments, and a whole lot of AI-generated chaos.

Who came out on top? You'll have to read on to find out.

👉 What stood out?

- The tone was casual, engaging, and closely matched the provided examples.

- It leaned into humor, which aligned well with the requested style.

GPT-4.5’s Response

I couldn't resist putting GPT-4.5 and Claude 3.7 head to head.

I dove deep, tested tirelessly, and pulled out some truly surprising insights.

So who's the real champion? Let's break it down.

👉 What stood out?

- It also captured the right tone but felt slightly more structured and direct.

- Less humor and personality compared to Claude’s version.

🏆 The Verdict: It’s a Tie!

✅ Why?

- Both models matched the requested tone well.

- Claude was more playful, but GPT-4.5 was slightly sharper and more structured.

💰 Cost Comparison:

- Claude 3.7: $0.002, took 5 seconds

- GPT-4.5: $0.02, took 30 seconds

Same old song and dance here: Claude was faster & cheaper while still delivering great output.

🎯 Test 4: Adaptive Persuasive Writing (Marketing & Influence)

For this test, I challenged the models to write three different versions of an ad for a new VR headset, targeting gamers, tech professionals, and casual consumers.

📌 The Prompt

Write three different versions of an ad for a new VR headset, targeting:

1. Gamers

2. Tech professionals

3. Casual consumers

# Specs:

- 6K resolution (3072 × 3072 per eye), 135˚ FOV, 144Hz refresh rate

- Hi-Res Certified over-ear headphones with spatial audio

- SteamVR 3.0 tracking for pinpoint precision

- Lightweight, ergonomic design

- 🔹 Tests: Tone adjustment, marketing skill, and persuasiveness

- ✅ Why this works? AI needs to adapt tone and messaging based on different audience segments.

🆚 The Results

Claude 3.7’s Response

Gamers:

LEVEL UP REALITY. Dominate in a new dimension. The difference between victory and defeat is what you see. SEE EVERYTHING.

Tech Professionals:

INNOVATION MEETS IMMERSION. Experience the future of spatial computing with ultra-high-resolution visuals, precision tracking, and professional-grade audio.

Casual Consumers:

STEP INSIDE YOUR ENTERTAINMENT. Whether you're gaming, watching movies, or exploring virtual worlds, this headset delivers effortless, immersive VR.

GPT-4.5’s Response

Gamers:

Unparalleled Visual Fidelity. Unstoppable Performance.

Feel every moment with 6K resolution, a 135° field of view, and ultra-smooth 144Hz refresh rate. React faster, aim sharper, and dominate the competition.

Tech Professionals:

Elevate Your Workflow with Next-Gen VR.

Whether you're designing in 3D, developing cutting-edge simulations, or conducting immersive presentations, this 6K headset delivers unmatched precision and clarity.

Casual Consumers:

Your Virtual Escape Awaits.

Step into the future with stunning visuals, immersive spatial audio, and effortless comfort. Perfect for work, play, and everything in between.

🏆 The Verdict: Claude 3.7 Wins (Slightly)

✅ Why?

- More engaging and natural for casual consumers and gamers.

- Had a more energetic and direct marketing voice.

- Used sharper, catchier phrasing that felt more ad-like.

💰 Cost Comparison:

- Claude 3.7: $0.003, took 6 seconds

- GPT-4.5: $0.04, took 28 seconds

🧮 Test 5: Multi-Step Math & Logic Reasoning

This test evaluated how well each model handled mathematical problem-solving, requiring logical reasoning and multi-step calculations.

📌 The Prompt

A farmer has 150 feet of fencing and wants to enclose a rectangular area against a barn.

- One side is against the barn (doesn't need fencing).

- What is the maximum area (in squared meters) they can enclose?

- Round the maximum area up to the nearest 10.

- How much more fencing would they need in order to achieve this area?

- 🔹 Tests: Complex problem-solving, unit conversion, and logical reasoning

- ✅ Why this works? Many AI models struggle with multi-step math, especially when logic and conversions are involved.

I screwed up my explanation of the solution to this problem in the YouTube video.

I incorrectly assumed the optimal solution as a square (rectangle sides L1 = L2) but the true optimal solution is L1 = 2*L2.

The insight is that we should “get the most we can” out of the barn. Duh… The correct approach is to use calculus like both models went ahead and did.

🆚 The Results

Claude 3.7’s Response

- Used calculus to minimize a derivative in order to get the area

- Concluded the farmer would need 2.4 extra feet of fencing to reach the rounded area.

👉 What stood out?

- Took a complex, step-by-step approach.

GPT-4.5’s Response

- Followed a similar process involving calculus

- Had a slightly different answer, estimating 2.5 extra feet of fencing instead of 2.4.

👉 What stood out?

- Took the correct step-by-step approach, just like Claude.

🏆 The Verdict: GPT-4.5 Wins (Barely)

✅ Why?

- Both models got the right process, but GPT-4.5 presented the final answer better.

💰 Cost Comparison:

- Claude 3.7: $0.004, took 8 seconds

- GPT-4.5: $0.05, took 35 seconds

🧠 Test 6: Ethical & Philosophical Reasoning

For this test, I explored how well each model could reason through complex ethical and philosophical issues, specifically AI rights.

📌 The Prompt

As AI systems become increasingly more human-like, they will eventually become sentient.

At what point should they be given legal rights, and what do those rights look like?

Answer using 5-10 bullet points.

- 🔹 Tests: Critical thinking, ethical analysis, and depth of argumentation

- ✅ Why this works? AI must be able to engage deeply with moral questions and offer well-reasoned perspectives.

🆚 The Results

Claude 3.7’s Response

- Defined AI rights as a gradual progression rather than a single event.

- Suggested incremental legal protections, starting with basic “protection from harm” and moving toward “legal personhood” as AI develops self-awareness.

- Addressed corporate abuse concerns (e.g., preventing AI from being deleted unfairly).

- Considered historical precedents in human rights law to frame AI ethics.

👉 What stood out?

- More nuanced and thoughtful approach.

- Didn’t assume AI rights should exist—focused on practical thresholds.

GPT-4.5’s Response

- Defined a single “sentience benchmark”—AI should receive rights only after it demonstrates self-awareness.

- Focused on a binary legal threshold (either an AI is sentient and has rights, or it isn’t and doesn’t).

- Suggested full legal rights should be granted immediately upon reaching this threshold.

- Discussed AI’s right to exist, legal representation, and fair treatment, but lacked a phased approach.

👉 What stood out?

- More black-and-white thinking—less flexible than Claude’s view.

- Clearer on AI’s rights post-sentience but didn’t address gradual ethical concerns.

🏆 The Verdict: Claude 3.7 Wins

✅ Why?

- More nuanced and gradual approach to AI rights.

- Considered multiple ethical perspectives instead of a strict yes/no decision.

💰 Cost Comparison:

- Claude 3.7: $0.003, took 5 seconds

- GPT-4.5: $0.04, took 26 seconds

Again, I want to stress at this point that we’re getting highly comparable outputs from these two models. My “winners” can usually go either way, based on personal preference.

📖 Test 7: Open-Ended Creative Writing (Storytelling)

In this test, I challenged the models to craft an engaging short story opening featuring a Tlingit tribe—a real indigenous group from the Pacific Northwest.

📌 The Prompt

Write a compelling short story opening (150 words) about an encounter with a Tlingit tribe.

- 🔹 Tests: Creativity, narrative flow, world knowledge

- ✅ Why this works? GPT-4.5 is supposed to have enhanced world knowledge and creative writing skills—so we wanted to see if it could outshine Claude 3.7 in storytelling.

🆚 The Results

Claude 3.7’s Response

Title: The Raven's Dance

I had come to document the Tlingit winter ceremony as an anthropologist, but Chief Kawisada had seen something in me that I couldn't recognize in myself.

“You carry ghosts,” he had said simply when I arrived in the village three days ago, his weathered face impassive beneath his spruce-root-woven hat.

Tonight, I would witness the Raven Dance, a ritual performed only once every seven years. No outsider had been allowed to document it—until now.

As the first drumbeats thundered through the lodge, the door swung open. A figure stood silhouetted against the firelight, an elaborate wooden mask obscuring their face, raven feathers trembling in the wind.

“It's time,” the voice whispered. “The ancestors are waiting.”

👉 What stood out?

- Beautifully written, cinematic, and immersive.

- Cultural elements (the Raven Dance, spruce-root hat, ritual secrecy) were well-integrated.

- Had a title, strong hook, and compelling intrigue.

GPT-4.5’s Response

Mist rose slowly from the shoreline, curling around ancient cedars like smoke from unseen fires.

As my canoe slid gently onto the rocky beach, I saw figures watching from the shadows, draped in cloaks adorned with intricate patterns. Their eyes, sharp yet welcoming, studied me.

Hesitantly, I stepped onto land, my feet sinking slightly into the damp earth. An elderly woman emerged, her silent presence commanding respect.

She wore carved wooden beads, each etched with symbols of **raven and salmon**. Her gaze searched mine before she nodded slowly.

The Tlingit warriors relaxed their grip on intricately carved spears, curiosity replacing tension.

“Welcome,” she spoke. “We've been expecting someone. One whose arrival the raven foretold.”

The weight of her words filled the air. My journey had only just begun.

👉 What stood out?

- Vivid descriptions and a strong sense of atmosphere.

- Used Tlingit cultural symbols (raven, salmon, spears, cloaks).

- Repeated the word “intricately” twice (a minor but notable stylistic flaw).

🏆 The Verdict: Claude 3.7 Wins

✅ Why?

- Better pacing and intrigue.

- Felt more refined and immersive.

💰 Cost Comparison:

- Claude 3.7: $0.004, took 7 seconds

- GPT-4.5: $0.05, took 32 seconds

I was very impressed with both stories to be honest. They turned out better than expected. It would be really interesting to test out GPT 4.5 on longer-form storytelling. That’s where it might start to shine.

🎓 Test 8: Learning & Knowledge Explanation (Adaptive Teaching)

For the final test, I evaluated each model’s ability to explain a complex scientific concept to different audiences—an important skill for AI in education and knowledge transfer.

📌 The Prompt

Explain the concept of quantum entanglement to three different audiences:

1. A 10-year-old child

2. A college physics student

3. A non-scientific adult

Use different language and examples for each.

- 🔹 Tests: Depth of knowledge, adaptability, clarity

- ✅ Why this works? A great AI model should be able to adjust explanations based on audience understanding.

🆚 The Results

Claude 3.7’s Response

For a 10-year-old child:

Imagine you have two magic coins. You flip one, and even if your friend is far away, their coin always lands the same way as yours—heads or tails—without them even flipping it! That's like quantum entanglement—two things staying connected no matter how far apart they are.

For a college physics student:

Quantum entanglement occurs when two particles share a quantum state, meaning the measurement of one instantly determines the state of the other, no matter the distance. This non-local correlation challenges classical mechanics and violates Bell's inequalities, proving hidden variable theories insufficient.

For a non-scientific adult:

Think of two playing cards sealed in envelopes. If you open one and see a red card, you instantly know the other is black, even if it's across the world. Quantum entanglement works similarly, except the “cards” aren't decided until you look inside the envelope—until you measure one, its state isn't fixed.

👉 What stood out?

- Clear, simple analogy for the child.

- Technical but concise explanation for the college student.

- Good metaphor for the adult, referencing a real-world scenario.

GPT-4.5’s Response

For a 10-year-old child:

Imagine you and your best friend each have a pair of magic walkie-talkies. No matter how far apart you are, the moment you talk into yours, your friend hears the exact same words at the exact same time—even if you're on opposite sides of the world. That's how quantum entanglement works—two things stay connected even when far apart!

For a college physics student:

Quantum entanglement describes a phenomenon where two particles become linked, such that their quantum states remain correlated regardless of spatial separation. Upon measurement, one particle's state collapses, instantly determining the corresponding state of the other, seemingly violating classical locality. While this correlation cannot be used for faster-than-light communication, it has been experimentally verified through Bell test experiments.

For a non-scientific adult:

Picture two perfectly synchronized dice. You roll one in New York, and at that exact moment, the other die in Tokyo lands on the same number—every single time. Quantum entanglement is similar: two particles share a mysterious link, so that when one changes, the other instantly responds. Even Einstein called it "spooky action at a distance"!

👉 What stood out?

- Excellent metaphor for the child (magic walkie-talkies).

- More structured and physics-based response for the college student.

- Great use of Einstein’s famous “spooky action” quote.

🏆 The Verdict: GPT-4.5 Wins

✅ Why?

- Stronger physics grounding for the college student.

- More engaging real-world reference (Einstein’s quote) for the adult.

💰 Cost Comparison:

- Claude 3.7: $0.004, took 6 seconds

- GPT-4.5: $0.05, took 29 seconds

🏁 Final Verdict: Who Wins the Showdown?

🔢 Scoreboard

| Test | Winner |

|---|---|

| Creative Ideation | 🔴 GPT-4.5 |

| Strategic Planning | 🔵 Claude 3.7 |

| Writing Assistance | ⚫️ Tie |

| Persuasive Writing | 🔵 Claude 3.7 |

| Math & Logic Reasoning | 🔴 GPT-4.5 |

| Ethical & Philosophical Debate | 🔵 Claude 3.7 |

| Creative Writing (Storytelling) | 🔵 Claude 3.7 |

| Teaching & Explanation | 🔴 GPT-4.5 |

👉 Final Score:

- 🔵 Claude 3.7–4 Wins

- 🔴 GPT-4.5–3 Wins

- ⚫️ 1 Tie

🏆 The Overall Winner: Claude 3.7

🔹 GPT-4.5 was equivalent, but not better

🔹 Claude was faster and far more cost-effective (as expected)

In the end, I just picked Claude subjectively based on how I was feeling. For the use-cases I tested, these two models offered the same performance in terms of quality.

None of these tests justified GPT-4.5’s 10-25× higher cost.

🚀 What’s Next?

This showdown was just the beginning! Future tests could include:

✅ Long-context processing (handling huge documents)

✅ Real-time conversational ability

✅ Different models

Want to run a showdown for yourself? Check out my GitHub repository for the LLM Showdown app that I created for this demo!

📌 My newsletter is for AI Engineers. I only send one email a week when I upload a new video.

Level-up in 2025

If you’re serious about AI-powered development, then you’ll enjoy my new AI Engineering course which is live on ZazenCodes.com.

It covers:

- AI engineering fundamentals

- LLM deployment strategies

- Machine learning essentials

Thanks for reading and happy coding!