The awesome power of an LLM in your terminal

Alexander Galea

January 15, 2025


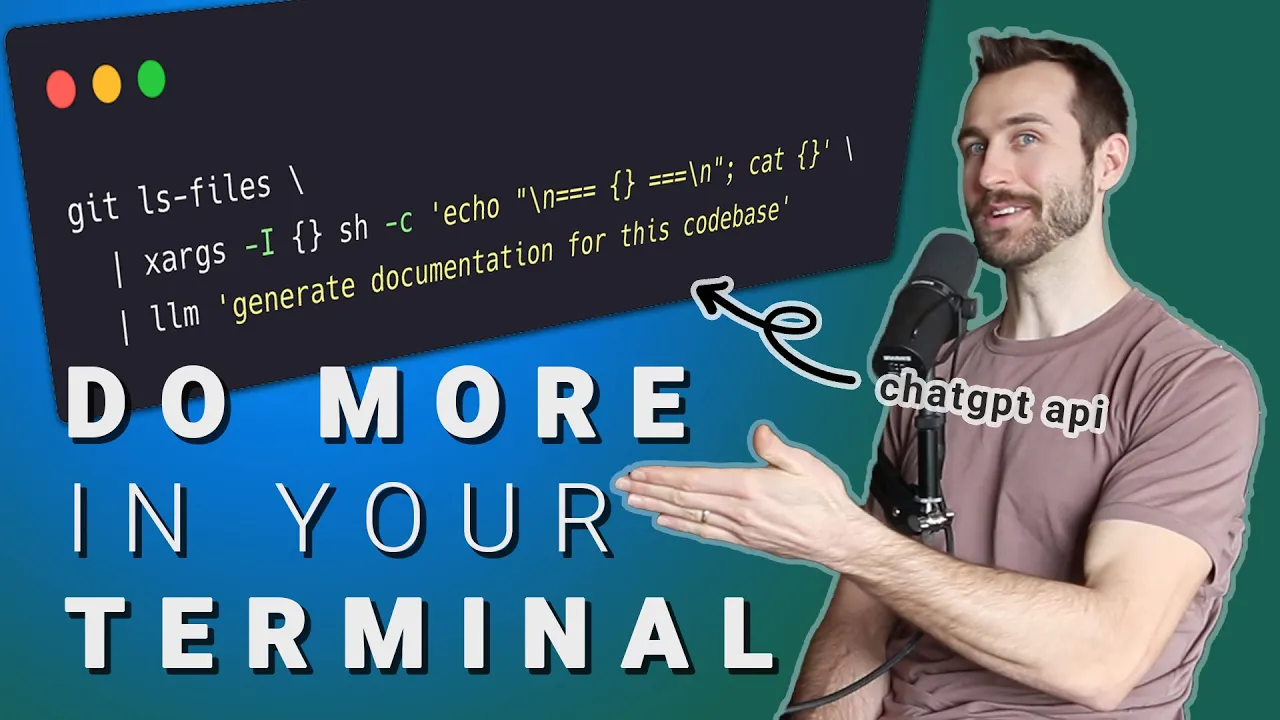

Use more keyboards (and less mouses) with this terminal LLM tool.

You can do stuff like

llm 'what is the meaning of life?'

And get the answers you’ve been searching for without even opening a browser.

Here’s another useful way to use this tool:

git ls-files | xargs -I {} sh -c 'echo "\n=== {} ===\n"; cat {}' | llm 'generate documentation for this codebase'

I break this one down below, and on YouTube.

🎥 Watch the Video

https://www.youtube.com/watch?v=VL2TmuDJXhE

🪷 Get the Source Code

https://github.com/zazencodes/zazencodes-season-2/tree/main/src/terminal-llm-tricks

What is llm?

A CLI utility and Python library for interacting with Large Language Models, both via remote APIs and models that can be installed and run on your own machine.

Here’s a link to the source code for the llm project: https://github.com/simonw/llm

Installation and Setup

Install the LLM CLI Tool

I installed the llm tool with pipx. Here are instructions for macOS:

# Install pipx, if you don't have it yet

brew install pipx

# Install llm using pipx

pipx install llm

Set Up OpenAI API Key

Since llm relies by default on OpenAI’s models, you’ll need to set up an API key.

Add this line to your shell configuration file (~/.bashrc, ~/.zshrc, or ~/.bash_profile):

export OPENAI_API_KEY=your_openai_api_key_here

Once you’ve saved the file, reload your shell or open a new session.

source ~/.zshrc # or source ~/.bashrc

Monitor API Usage

The llm CLI does not print usage statistics by default, so you should keep an eye on your OpenAI account’s usage page to avoid unexpected charges.

Basic Usage

Once installed, you can start using llm immediately. Here are some core commands to get started:

Check Available Models

llm models

This lists available OpenAI models, such as gpt-4o or gpt-4o-mini, that you can use.

Basic Prompting

llm "Tell me something I'll never forget."

Continuing a Conversation

Using the -c flag, you can continue a conversation rather than starting a new one:

llm "Explain quantum entanglement."

llm -c "Summarize that in one sentence."
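If you ever want to drive the same two-step conversation from a script, here is a minimal Python sketch. It assumes the llm CLI is installed and on your PATH. The helper names `build_llm_command` and `ask` are made up for this example and are not part of the llm project; only the `-c` flag comes from the commands above.

```python
import shutil
import subprocess


def build_llm_command(prompt: str, continue_conversation: bool = False) -> list[str]:
    """Build the argument list for one `llm` call (hypothetical helper)."""
    command = ["llm"]
    if continue_conversation:
        command.append("-c")  # same flag as in the shell example above
    command.append(prompt)
    return command


def ask(prompt: str, continue_conversation: bool = False) -> str:
    """Run the llm CLI and return whatever it prints to standard output."""
    if shutil.which("llm") is None:
        raise RuntimeError("the llm CLI was not found on PATH")
    result = subprocess.run(
        build_llm_command(prompt, continue_conversation),
        capture_output=True,
        text=True,
        check=True,  # raise if llm exits with an error
    )
    return result.stdout
```

Calling `ask("Explain quantum entanglement.")` and then `ask("Summarize that in one sentence.", continue_conversation=True)` should mirror the two shell commands.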

View Conversation History

llm logs

This displays previous interactions, allowing you to track past queries.

CLI Tools and Practical Use Cases

One of the most exciting aspects of using an LLM in the terminal is seamless integration with command-line tools. Whether you’re working with system utilities, parsing files, or troubleshooting issues, llm can act as your real-time AI assistant.

Getting Help with Linux Commands

This is my favorite use-case.

For example:

llm "Linux print date. Only output the command."

Output:

date

Want a timestamp instead?

llm -c "As a timestamp."

Output:

date +%s

Understanding File Permissions

With Unix pipes, you can use llm to translate terminal output into a human-readable format.

For example, to understand the output of ls -l:

Command

ls -l | llm "Output these file permissions in human-readable format line by line."

Example Output:

-rw-r--r-- → Owner can read/write, group can read, others can read.

drwxr-xr-x → Directory where owner can read/write/execute, group and others can read/execute.

This is an easy way to explain Linux permissions.
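Since the model can get details wrong, it helps to have a deterministic cross-check. Here is a rough Python sketch that decodes the same mode strings. The function name `describe_permissions` is an invention for this example. It only handles the plain 10-character form, so special bits (setuid, sticky) and the trailing `@` or `+` that some systems append are out of scope.

```python
def describe_permissions(mode: str) -> str:
    """Translate a 10-character `ls -l` mode string into plain English."""
    if len(mode) != 10:
        raise ValueError(f"expected 10 characters, got {mode!r}")
    kinds = {"-": "file", "d": "directory", "l": "symlink"}
    names = {"r": "read", "w": "write", "x": "execute"}
    parts = []
    # Characters 1-3 belong to the owner, 4-6 to the group, 7-9 to everyone else.
    for who, start in (("owner", 1), ("group", 4), ("others", 7)):
        granted = [names[c] for c in mode[start:start + 3] if c in names]
        parts.append(f"{who}: {'/'.join(granted) or 'none'}")
    return f"{kinds.get(mode[0], 'other')} ({', '.join(parts)})"


print(describe_permissions("-rw-r--r--"))
# file (owner: read/write, group: read, others: read)
```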

Parsing CSV Data Using AI

If you have structured data in a CSV file but don’t want to load it into Python or Excel, you can use llm to generate a simple Bash command for analysis.

Example Prompt

llm "I have a CSV file like this and I need to determine the smallest date (dt) column. Use a bash command.

dt,url,device_name,country,sessions,instances,bounce_sessions,orders,revenue,site_type

20240112,https://example.com/folder/page?num=5,,,2,0,1,,,web

20240209,https://example.com/,,,72,0,29,,,mobile

20240111,https://example.com/page,,,1,0,1,,,web

"

Generated Command:

awk -F ',' 'NR>1 {if (min=="" || $1<min) min=$1} END {print min}' file.csv

This lets you quickly extract insights without having to write code from scratch.
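It is worth sanity-checking a generated one-liner before trusting it. As a sketch, the same question can be answered in Python with the standard csv module, using the sample rows from the prompt. The name `smallest_date` is hypothetical, and the approach assumes dates are zero-padded YYYYMMDD strings, which sort chronologically as plain text.

```python
import csv
import io

SAMPLE = """\
dt,url,device_name,country,sessions,instances,bounce_sessions,orders,revenue,site_type
20240112,https://example.com/folder/page?num=5,,,2,0,1,,,web
20240209,https://example.com/,,,72,0,29,,,mobile
20240111,https://example.com/page,,,1,0,1,,,web
"""


def smallest_date(csv_text: str, column: str = "dt") -> str:
    """Return the smallest non-empty value found in `column`."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return min(row[column] for row in rows if row[column])


print(smallest_date(SAMPLE))  # 20240111
```

If this and the awk command disagree on your real file, that is a good hint to look closer at one of them.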

Setting Up a Firewall (UFW)

Need to configure a firewall rule? Instead of Googling it, just ask:

llm "Give me a UFW command to open port 8081."

Output:

sudo ufw allow 8081

Follow-up question:

llm -c "Do I need to restart it after?"

Output:

No, UFW automatically applies changes, but you can restart it using: sudo systemctl restart ufw.

Extracting IPs from Logs

Analyzing logs sucks. But llm can make it suck less.

If you’re analyzing logs and need to extract the most frequent IP addresses, let llm generate a command for you.

Example Prompt

llm "I have a log file and want to extract all IPv4 addresses that appear more than 5 times."

Generated Command:

grep -oE '\b([0-9]{1,3}\.){3}[0-9]{1,3}\b' logfile.log | sort | uniq -c | awk '$1 > 5'
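For comparison, here is roughly what that pipeline does, sketched in Python. It reuses the same loose pattern as the grep command, so like the original it does not check that each octet is 255 or less. The function name `frequent_ips` is made up for this example.

```python
import re
from collections import Counter

# Same loose IPv4 pattern as the grep command above.
IPV4 = re.compile(r"\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b")


def frequent_ips(log_text: str, threshold: int = 5) -> dict[str, int]:
    """Count IPv4-looking strings and keep those seen more than `threshold` times."""
    counts = Counter(IPV4.findall(log_text))
    return {ip: n for ip, n in counts.items() if n > threshold}
```

You could call it with `frequent_ips(open("logfile.log").read())` and compare the counts with the shell output.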

Code Documentation and Commenting

One really cool use-case of llm is to generate docstrings, type hints, and even full codebase documentation.

Generating Function Docstrings

For a single file, we can generate docstrings:

Command:

cat ml_script.py | llm "Generate detailed docstrings and type hints for each function."

Example Input Code (ml_script.py):

def calculate_area(width, height):
    return width * height

Generated Output:

def calculate_area(width: float, height: float) -> float:
    """
    Calculate the area of a rectangle.

    Parameters:
    width (float): The width of the rectangle.
    height (float): The height of the rectangle.

    Returns:
    float: The computed area of the rectangle.
    """
    return width * height

Documenting an Entire Codebase

For larger projects, we can analyze the entire codebase to create documentation.

Step 1: Find All Python Files

find . -name '*.py'

This lists all Python files in your project.

Step 2: Extract File Contents with Filenames

find . -name '*.py' | xargs -I {} sh -c 'echo "\n=== {} ===\n"; cat {}'

This ensures that the LLM receives both the filename and contents for better contextual understanding.

Step 3: Generate Documentation

find . -name '*.py' | xargs -I {} sh -c 'echo "\n=== {} ===\n"; cat {}' | llm "Generate documentation for this codebase."

Filtering Specific Files for Documentation

If your project includes non-code files, you may want to manually select which ones to document.

Step 1: Save File List to a Text File

git ls-files > files.txt

Then, edit files.txt and remove unnecessary files.

Step 2: Generate Documentation for Selected Files

cat files.txt | xargs -I {} sh -c 'echo "\n=== {} ===\n"; cat {}' | llm "Generate documentation for this codebase."

This allows for manual curation while still leveraging AI for documentation.
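When the file list is long, a first pass by extension can save some manual editing. This is only a sketch: the function name `select_files` and the default suffix list are assumptions, and the input is expected to look like `git ls-files` output, one path per line.

```python
from pathlib import PurePosixPath


def select_files(listing: str, suffixes: tuple[str, ...] = (".py",)) -> list[str]:
    """Keep paths from a one-path-per-line listing whose suffix is in `suffixes`."""
    paths = [line.strip() for line in listing.splitlines() if line.strip()]
    return [p for p in paths if PurePosixPath(p).suffix in suffixes]


print(select_files("README.md\nsrc/app.py\nassets/logo.png\n"))  # ['src/app.py']
```

You would still want to skim the result by hand, since an extension says nothing about whether a file is worth documenting.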

Using AI to Format Documentation Properly

Sometimes llm outputs Markdown formatting or unnecessary explanations. If you need only the code, you can refine your prompt:

cat ml_script.py | llm "Generate detailed docstrings and type hints for each function. Output only the code."

This usually gives clean output that is ready to be inserted into your scripts.
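Models sometimes wrap code in Markdown fences even when asked not to. As a fallback, a small post-processing step can strip them. Here is a sketch, with a hypothetical function name `strip_code_fences`, that keeps only the body of the first fenced block and leaves unfenced text untouched.

```python
import re

FENCE_MARK = "`" * 3  # three backticks, built this way to keep the example readable

# An opening fence with an optional language tag, the body, then a closing fence.
FENCE = re.compile(
    rf"^{FENCE_MARK}[\w+-]*\n(.*?)^{FENCE_MARK}\s*$",
    re.DOTALL | re.MULTILINE,
)


def strip_code_fences(text: str) -> str:
    """Return the body of the first fenced block, or the text unchanged if none."""
    match = FENCE.search(text)
    return match.group(1) if match else text
```

This only looks at the first block, so a reply containing several fenced snippets would need a different approach.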

With llm, you can automate docstring generation, document entire projects, and improve code readability with just a few terminal commands.

Code Refactoring and Migrations

Refactoring code can be tedious, especially when dealing with monolithic functions or legacy codebases.

Refactoring Command:

cat ml_script_messy.py | llm "Refactor this code and add comments to explain it."

If this doesn’t do the trick, we can continue the conversation with a refined prompt:

llm -c "Refactor into multiple functions with clear responsibilities."

Migrating Python 2 Code to Python 3

AI is really good at migrating legacy Python code.

Example Input (py2_script.py - Python 2 Code):

print "Enter your name:"

name = raw_input()

print "Hello, %s!" % name

Migration Command:

cat py2_script.py | llm "Convert this to Python 3. Include inline comments for every update you make."

Generated Python 3 Output (py2_script_migrated.py):

# Updated print statement to Python 3 syntax

print("Enter your name:")

# Changed raw_input() to input() for Python 3 compatibility

name = input()

# Updated string formatting to f-strings

print(f"Hello, {name}!")

Key Updates:

- print statements now use parentheses.

- raw_input() replaced with input().

- Old-style string formatting (%) updated to f-strings.

Piping Migration Output to a File

Instead of displaying the migrated script in the terminal, you can store the updated version in a new file:

cat py2_script.py | llm "Convert this to Python 3. Only output the code." > py2_script_migrated.py

Now, you can review and test the updated file before deploying.
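One cheap first test is to check that the migrated file at least parses as Python 3. The sketch below uses the standard ast module, and the name `is_valid_python3` is made up for this example. It only catches syntax problems: a leftover `raw_input()` call parses fine and would fail later at runtime.

```python
import ast


def is_valid_python3(source: str) -> bool:
    """Return True if `source` parses under the running Python 3 interpreter."""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True


print(is_valid_python3('print "Enter your name:"'))  # False
print(is_valid_python3('print("Enter your name:")'))  # True
```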

Fine-Tuning the Migration Process

If you notice issues with the conversion, refine your prompt. Example:

llm -c "Ensure all functions have type hints and docstrings."

This nudges the migrated script toward modern best practices, though the result is still worth reviewing.

Debugging Assistance

Debugging can be one of the most frustrating parts of development. llm can help a bit. Specifically, it can interpret error messages, suggest solutions, and even analyze logs.

Interpreting Error Messages

Example: Running a Python 2 Script in Python 3

On any modern system with Python installed, this would probably fail (since python will invoke some version of Python 3):

python py2_script.py

Error Message:

SyntaxError: Missing parentheses in call to 'print'. Did you mean print("Hello, world")?

Ask llm for Help:

llm "Explain this error and tell me how to fix it. Here's the Python traceback:

SyntaxError: Missing parentheses in call to 'print'. Did you mean print(\"Hello, world\")?"

Generated Explanation and Solution:

This error occurs because Python 3 requires print statements to be wrapped in parentheses.

Solution: Update your script to use print("message") instead of print "message".
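Copying tracebacks by hand gets old quickly. Here is a sketch that runs a snippet in a child Python process, captures stderr, and wraps it in the same prompt wording used above. The function name `error_prompt` is an assumption, and passing the code as a string is a simplification of running a script file.

```python
import subprocess
import sys


def error_prompt(script_source: str) -> str:
    """Run `script_source` in a child interpreter and wrap any stderr in a prompt."""
    result = subprocess.run(
        [sys.executable, "-c", script_source],
        capture_output=True,
        text=True,
        stdin=subprocess.DEVNULL,  # never block waiting for input
    )
    if result.returncode == 0:
        return ""  # nothing went wrong, so there is nothing to ask
    return (
        "Explain this error and tell me how to fix it. "
        "Here's the Python traceback:\n" + result.stderr
    )
```

The returned string can then be passed to llm as the prompt.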

Generating Test Cases for Your Code

Command:

cat ml_script.py | llm "Generate unit tests for each function."

Example Output:

import unittest

from ml_script import calculate_area


class TestCalculateArea(unittest.TestCase):
    def test_normal_values(self):
        self.assertEqual(calculate_area(5, 10), 50)

    def test_zero_values(self):
        self.assertEqual(calculate_area(0, 10), 0)
        self.assertEqual(calculate_area(5, 0), 0)

    def test_negative_values(self):
        self.assertEqual(calculate_area(-5, 10), -50)


if __name__ == '__main__':
    unittest.main()

With one command, llm generates a full test suite.

Are they good tests? No — probably not. But they are better than nothing, right?

Analyzing Logs for Debugging

Analyzing logs is suuuuch a pain that we’re going to talk about it again.

Extracting Errors from Logs

First, filter only error lines from your log file:

grep "ERROR" app.log > error_log.txt

Then, ask llm to analyze the errors:

cat error_log.txt | llm "Analyze these logs, summarize the errors, and suggest potential causes."

Example Output:

Summary of Errors:

- 503 Service Unavailable: Possible connectivity issue with the external API.

- Duplicate key error in Redis: Consider adding a unique constraint or checking for existing keys before inserting.

Suggested Fixes:

1. Implement a retry mechanism for failed API requests.

2. Add error handling to check if a Redis key exists before writing.

Detecting Repeated Issues in Logs

If you want to find repeated errors, use this command:

cat app.log | llm "Extract recurring error messages and estimate their frequency."

This helps identify the most common issues affecting your system.
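The model only estimates frequencies, so exact counts are worth computing separately. Here is a sketch that does so, assuming each error line contains the word ERROR followed by the message. That layout, the pattern, and the name `recurring_errors` are assumptions, so adjust them to your own log format.

```python
import re
from collections import Counter

# Assumed layout: anything, then the word ERROR, then the message text.
ERROR_LINE = re.compile(r"\bERROR\b[:\s-]*(.*)")


def recurring_errors(log_text: str, min_count: int = 2) -> list[tuple[str, int]]:
    """Count identical ERROR messages and return the repeated ones, most common first."""
    messages = []
    for line in log_text.splitlines():
        match = ERROR_LINE.search(line)
        if match:
            messages.append(match.group(1).strip())
    counts = Counter(messages)
    return [(msg, n) for msg, n in counts.most_common() if n >= min_count]
```

Messages that differ only by an ID or timestamp inside the text will count as distinct here, which is exactly where the llm version tends to be more forgiving.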

Boilerplate Code Generation

Starting a new project often requires setting up boilerplate code—basic structures, imports, and configurations. Instead of writing this from scratch, llm can generate templates for us.

Generating a FastAPI App

Want to spin up a FastAPI server quickly? Just ask:

Command:

llm "Generate boilerplate code for a FastAPI app with a single route. Only output the code." > app.py

Generated app.py:

from fastapi import FastAPI

app = FastAPI()


@app.get("/")
def read_root():
    return {"message": "Hello, World!"}


if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="127.0.0.1", port=8000)

BAM — a fully working FastAPI app!

To run the server:

uvicorn app:app --reload

Adding More Routes

Let’s say you now need a POST endpoint. Instead of writing it manually, extend your prompt:

llm -c "Add another route that accepts POST requests."

Generated Output:

from fastapi import FastAPI, Request

app = FastAPI()


@app.get("/")
def read_root():
    return {"message": "Hello, World!"}


@app.post("/submit")
async def submit_data(request: Request):
    data = await request.json()
    return {"received": data}


if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="127.0.0.1", port=8000)

Generating a Flask App

If you prefer Flask, ask:

llm "Generate a minimal Flask app with a single route."

Generated Output:

from flask import Flask

app = Flask(__name__)


@app.route("/")
def home():
    return {"message": "Hello, Flask!"}


if __name__ == "__main__":
    app.run(debug=True)

To run the Flask server, save the generated code as app.py and run:

python app.py

Code Explanations

Understanding someone else’s code (or even your own after a long break) can be challenging. Instead of manually analyzing it line by line, you can use llm to explain code, summarize projects, and break down complex logic directly in your terminal.

Explaining a Code Snippet

If you encounter an unfamiliar function, let llm walk you through it.

Command:

cat ml_script.py | llm "Walk through this file and explain how it works. Start with a summary, then go line-by-line for the most difficult sections."

Explaining an Entire Codebase

We can apply the same logic to an entire codebase.

In this case, we look at all the Python files:

Step 1: Find All Python Files

find . -name '*.py'

Step 2: Extract File Contents with Filenames

find . -name '*.py' | xargs -I {} sh -c 'echo "\n=== {} ===\n"; cat {}'

Step 3: Ask llm to Explain the Codebase

find . -name '*.py' | xargs -I {} sh -c 'echo "\n=== {} ===\n"; cat {}' | llm "Explain this project and summarize the key components."

Summarizing Open Source Projects

Here’s a specific example using nanoGPT, an open-source project by Andrej Karpathy.

Step 1: Clone the Repository

git clone https://github.com/karpathy/nanoGPT

cd nanoGPT

Step 2: Get a Quick Summary from the README

cat README.md | llm "Explain this project in one paragraph using bullet points."

Providing a Detailed Breakdown

To analyze the full codebase, we can continue the conversation:

Step 3: Ask for a More Detailed Explanation

find . -name "*.py" | xargs -I {} sh -c 'echo "\n=== {} ===\n"; cat {}' | llm -c "Given these files, explain the overall project structure."

Final Thoughts and Next Steps

We’ve covered how to use an LLM in your terminal to streamline development, improve productivity, and make coding more efficient. Whether you’re debugging, refactoring, automating documentation, generating test cases, or parsing logs, llm is a powerful addition to your workflow.

Key Takeaways

✅ Installation & Setup – Install via pipx and configure your OpenAI API key.

✅ Basic Usage – Run simple prompts, continue conversations, and log queries.

✅ CLI Productivity – Generate Linux commands, parse CSV data, set up firewalls, and analyze logs.

✅ Code Documentation – Automate docstrings and generate project-wide documentation.

✅ Refactoring & Migration – Break down monolithic functions and convert Python 2 to 3.

✅ Debugging – Explain error messages, generate unit tests, and analyze logs for recurring issues.

✅ Boilerplate Code – Quickly scaffold FastAPI and Flask apps.

✅ Code Explanation – Summarize complex scripts or entire repositories instantly.

What’s Next?

If you enjoyed this workflow, here are some next steps to explore:

🔹 Experiment with Different Models – Try free open-source models with Ollama (https://www.youtube.com/watch?v=dS9Vbye-xSY) instead of the default gpt-4o-mini.

🔹 Integrate llm into Your Shell Aliases – Create quick aliases for frequent tasks.

# A function, not an alias, so the command you pass becomes part of the prompt
explain() { llm "Explain this command: $*"; }

alias docgen="find . -name '*.py' | xargs cat | llm 'Generate documentation for this codebase'"

More AI-Powered Developer Tools

If you love integrated AI tools, and especially if you’re a Neovim user, then you might just looove avante.nvim (like I do).

This will get you up and running: Get the Cursor AI experience in Neovim with avante nvim (https://www.youtube.com/watch?v=4kzSV2xctjc)

Join the Discussion!

Have ideas on how to use llm more effectively? Slide into my Discord server (https://discord.gg/e4zVza46CQ) and let me know.

Thanks for reading, and happy coding! 🚀