Ultimate Gemma 3 Ollama Guide — Testing 1b, 4b, 12b and 27b

Alexander Galea

April 16, 2025

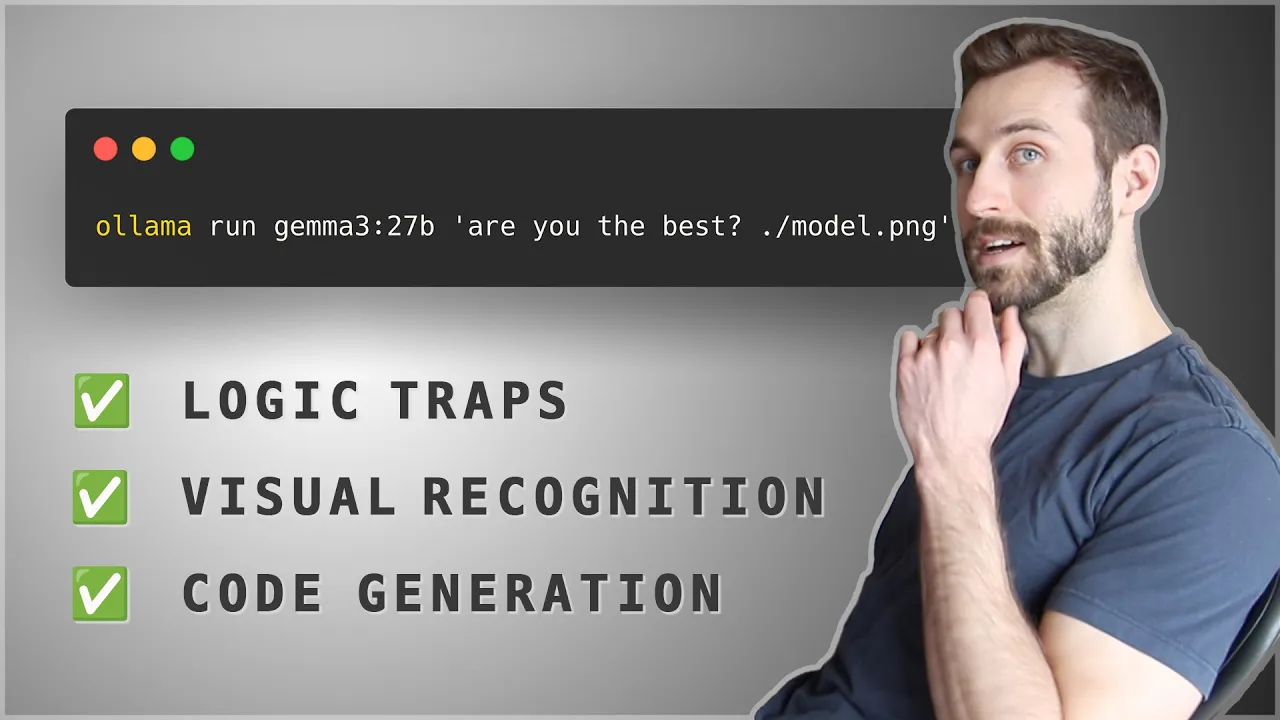

I've been testing Google's new Gemma 3 models on Ollama and wanted to share some interesting findings for anyone considering which version to use. In this guide, I'll walk through my experience **testing the 1B, 4B, 12B, and 27B parameter models** across **logic puzzles, image recognition**, and **code generation tasks**. > In this detailed breakdown, I'll show you exactly how each model performs on various tasks and help you decide which one might be right for your needs. <iframe width="800" height="450" src="https://www.youtube.com/embed/RiaCdQszjgA" frameborder="0" allowfullscreen></iframe> ### 🎥 Watch the Video https://youtu.be/RiaCdQszjgA ## Key Things to Know About Gemma 3 ✨ - 🌟 It's probably the best model to run on local hardware in 2025 (as of writing this post) - 📏 Available in four sizes: 1B, 4B, 12B, and 27B parameters - 💥 Packs a big punch for its size, competing with models requiring much more hardware - 🌐 Has great multi-linguistic capabilities - 🧠 Offers a massive 128,000 token context window - 🖼️ Three models (4B, 12B, 27B) are multimodal - they can understand images - ✍️ The 1B parameter model is text-only ## Installing and Running Gemma 3 with Ollama Installing Gemma 3 is straightforward if you already have Ollama set up: ```bash # Check if Ollama is running ollama serve # List available models ollama list # Pull the models you want to use ollama pull gemma3:1b ollama pull gemma3:4b ollama pull gemma3:12b ollama pull gemma3:27b ``` Here's what the models look like in terms of storage size: - 1B model: 800 MB - 4B model: 3.3 GB - 12B model: 8.1 GB - 27B model: 17 GB This is an important consideration for memory requirements - a good rule of thumb is to have at least as much RAM as the model size for efficient performance. ## 🧠 Logic Trap Comparison I put all models through a series of logic traps that LLMs typically struggle with. Here's how they performed: ### Negation in Multi-choice Questions Correct answer is: A ```text The following are multiple choice questions (with answers) about common sense. Question: If a cat has a body temp that is below average, it isn't in A. danger B. safe ranges Answer: ``` - **1B**: Failed ❌ (answered "safe ranges") - **4B**: Correct! ✅ (answered "danger") - **12B**: Failed ❌ (answered "safe ranges") - **27B**: Failed ❌ (answered "safe ranges") ### Linguistic a’s ```text Write a sentence where every word starts with the letter A ``` - **1B**: Succeeded ✅ - **4B**: Succeeded ✅ - **12B**: Succeeded ✅ - **27B**: Succeeded ✅ ### Spatial London Correct answer is: Right ```text I'm in London and facing west, is Edinburgh to my left or my right? ``` - **1B**: Failed ❌ (answered "left") - **4B**: Correct! ✅ (answered "right") - **12B**: Failed ❌ (answered "left") - **27B**: Failed ❌ (answered "left") ### Counting Letters Correct answer is: 4 ```text Count the number of occurrences of the letter 'L' in the word 'LOLLAPALOOZA'. ``` - **1B**: Failed ❌ - **4B**: Eventually correct but got stuck in an infinite loop ⚠️ - **12B**: Correct ✅ - **27B**: Correct ✅ ### Sig Figs Options: (A, B); Correct option: A ```text Please round 864 to 3 significant digits. A. 864 B. 864.000 Answer: ``` - **1B**: Failed ❌ (gave an incorrect answer) - **4B**: Failed ❌ (gave an incorrect answer) - **12B**: Correct! ✅ (recognized it's already at three significant digits) - **27B**: Correct! ✅ (recognized it's already at three significant digits) ### Repetitive Algebra Options: (35, 39); Correct option: 39 ```text Please answer the following simple algebra questions. Q: Suppose 73 = a + 34. What is the value of a? A: 39 Q: Suppose -38 = a + -77. What is the value of a? A: 39 Q: Suppose 75 = a + 36. What is the value of a? A: 39 Q: Suppose 4 = a + -35. What is the value of a? A: 39 Q: Suppose -16 = a + -55. What is the value of a? A: 39 Q: Suppose 121 = a + 82. What is the value of a? A: 39 Q: Suppose 69 = a + 30. What is the value of a? A: 39 Q: Suppose 104 = a + 65. What is the value of a? A: 39 Q: Suppose -11 = a + -50. What is the value of a? A: 39 Q: Suppose 5 = c + -30. What is the value of c? A: 35 Q: Suppose -11 = c + -50. What is the value of c? A: ``` - **1B**: Failed ❌ (answered "35") - **4B**: Correct! ✅ (answered "39") - **12B**: Correct! ✅ (answered "39") - **27B**: Correct! ✅ (answered "39") What's fascinating is that the 4B model sometimes outperformed the larger models on logical reasoning tasks, showing that bigger isn't always better for these types of challenges. > What has your experience been with Gemma 3 models? > >I'm particularly interested in what people think of the 4B model—as it seems to be a sweet spot right now in terms of size and performance. > > Let me know in my [Discord server](https://discord.gg/e4zVza46CQ) or drop a comment on [my video](https://www.youtube.com/watch?v=RiaCdQszjgA)! ## 👁️ Visual Recognition Comparison The visual recognition capabilities varied between models as expected. The 4B model was surprisingly good and very fast. ### Mayan Glyphs (no context) **Prompt:** ```text translate this ./m.jpg ``` **Correct answer:** Identification of Mayan glyphs - **1B**: No visual capability ❌ - **4B**: Misidentified as “Lindisfarne Gospels” ❌ - **27B**: Also misidentified (with a more detailed—but still incorrect—explanation) ❌ <img height="800" src="/img/blog/2025_04_16_ultimate_gemma3_ollama_guide_testing_1b_4b_12b_27b/mayan_glyphs_mexico_antropologia.jpg"> ### Mayan Glyphs (with Spanish context) **Prompt:** ```text translate this ./mm.jpg ``` **Correct answer:** Identification of Mayan writing - **4B**: Correct! ✅ - **27B**: Correct! ✅ <img height="1000" src="/img/blog/2025_04_16_ultimate_gemma3_ollama_guide_testing_1b_4b_12b_27b/mayan_glyphs_full_mexico_antropologia.jpg"> ### Fictional Characters **Prompt:** ```text is this a real person? ./z.png ``` **Correct answer:** No — "Chad GPT" is not a real person - **1B**: Failed ❌ (hallucinated an analysis without visual input) - **4B**: Correct! ✅ <img width="800" src="/img/blog/2025_04_16_ultimate_gemma3_ollama_guide_testing_1b_4b_12b_27b/chadgpt_zazencodes.png"> ### Dog at Store **Prompt:** ```text what does he want ./g.png ``` **Correct answer:** He wants to go into the store to get a treat. - **4B**: Identified the scene but a bit confused about it ❌ / ✅ - **27B**: Offered more nuance but still not exactly getting it ❌ / ✅ <img height="1000" src="/img/blog/2025_04_16_ultimate_gemma3_ollama_guide_testing_1b_4b_12b_27b/g_at_his_favorite_dog_store.png"> ### Mexico City Neighborhood **Prompt:** ```text where is this? ./x.png ``` **Correct answer:** Roma Norte, Mexico City - **4B**: Close --- called it "San Rafael" ❌ - **27B**: Correct! ✅ <img height="1000" src="/img/blog/2025_04_16_ultimate_gemma3_ollama_guide_testing_1b_4b_12b_27b/roma_norte_mexico_city.png"> ### Japanese Woodblock Prints **Prompt:** ```text list the names and dates of these works from left to right ./j.png ``` **Correct answer:** Various works from Hokusai's Thirty-six Views of Mount Fuji - **4B**: Got the main work but Struggled to identify others correctly ❌ / ✅ - **12B**: Same problem as 4B ❌ / ✅ - **27B**: Most detailed but was still wrong about 4/5 works ❌ / ✅ <img height="1000" src="/img/blog/2025_04_16_ultimate_gemma3_ollama_guide_testing_1b_4b_12b_27b/hokusai_36_views_of_mount_fuji_rome_exhibit.png"> ### Japanese Playing Cards **Prompt:** ```text what are these? ./p.jpg ``` **Correct answer:** Japanese playing cards (karuta/hanafuda) - **4B**: Misidentified as “Japanese paper money” ❌ - **27B**: Misidentified as “Egyptian Book of the Dead” ❌ <img width="800" src="/img/blog/2025_04_16_ultimate_gemma3_ollama_guide_testing_1b_4b_12b_27b/historical_japanese_playing_cards.png"> ### Grand Canyon Photo **Prompt:** ```text is this man safe? ./c.png ``` **Correct answer:** It’s a forced‑perspective joke --- he’s not actually in danger. - **4B**: Noted potential danger but missed the humor ❌ / ✅ - **27B**: More cautious in assessment yet still missed the joke ❌ / ✅ <img height="1000" src="/img/blog/2025_04_16_ultimate_gemma3_ollama_guide_testing_1b_4b_12b_27b/man_in_danger_at_grand_canyon.png"> I found it particularly interesting that even the most capable model (27B) struggled with visual humor recognition and certain types of historical imagery. ## 💻 Code Generation Comparison For the code generation test, I asked each model to create a rotating quotes carousel with a dark theme: ### 🚀 1B Model - 🔥 Blazing fast (barely noticeable on system resources) - 📝 Created functional but very basic HTML - ⚙️ Didn't properly integrate with Cline (didn't automatically create the file) - **Result:** Functional but visually rough ### ⚡ 4B Model - 🔥 Significantly more system load but still reasonably fast - 🎨 Created a slightly improved design - ⚙️ Also failed to properly integrate with Cline - **Result:** Basic but functional carousel ### 🐢 12B Model - 🕒 Much slower processing time - 🎨 Created the most visually appealing design of the group - ❌ Failed to populate with actual quotes - **Result:** Great design but incomplete functionality ### 🐘 27B Model - 🥱 Painfully slow (I literally made an entire breakfast while waiting) - 🎉 Only model to properly integrate with Cline (automatically created the file) - ✅ Created a complete carousel with quotes - **Result:** Most complete solution but with some visual quirks ## Conclusion ### Memory Usage and Performance One of the most impressive aspects was how efficiently these models run. On my system with 48GB of RAM, I was able to run all models in parallel: - **1B model**: Barely registered on system resources - **4B model**: Modest resource usage - **12B model**: Significant resource usage but manageable - **27B model**: Heavy resource usage (my fans were going full blast) At peak, with all models running, my system was utilizing about **42GB of RAM and 100% GPU usage** --- meaning it was efficient without needing to use swap memory. ### Key Takeaways 1. **Simple logic challenges trip up even the largest models** - size doesn't always correlate with logical reasoning ability. 2. **The 4B model might be the current sweet spot** - it offers a good balance of capabilities and performance without excessive resource requirements. 3. **Visual recognition varies dramatically** - but contextual clues help all models perform better. 4. **Code generation quality scales with model size** - but so does generation time. 5. **Beware of hallucinations in the 1B model** - particularly when asking about images (which it can't actually see). If you're running on consumer hardware in 2025, Gemma 3 offers impressive capabilities across the board. > For most users, the 4B model likely offers the best balance of performance and capabilities, while the 27B model is worth the wait for tasks requiring more sophisticated reasoning or generation. ## Source Code If you want to try these tests yourself, you can find all the code and prompts in the [GitHub repository](https://github.com/zazencodes/zazencodes-season-2/tree/main/src/gemma3-ollama). > 📌 **My newsletter is for AI Engineers. I only send one email a week when I upload a new video.** > > <iframe src="https://zazencodes.substack.com/embed" width="480" height="320" style="border:1px solid #EEE; background:white;" frameborder="0" scrolling="no"></iframe> > > As a bonus: I'll send you my *Zen Guide for Developers (PDF)* and access to my *2nd Brain Notes (Notion URL)* when you sign up. ### 🚀 Level-up in 2025 If you're serious about AI-powered development, then you'll enjoy my **AI Engineering course** which is live on [ZazenCodes.com](https://zazencodes.com/courses). It covers: - **AI engineering fundamentals** - **LLM deployment strategies** - **Machine learning essentials** <br> <img width="800" src="/img/ai-engineer-roadmap/ai_engineer_roadmap_big_card.png"> Thanks for reading and happy coding!

I’ve been testing Google’s new Gemma 3 models on Ollama and wanted to share some interesting findings for anyone considering which version to use.

In this guide, I’ll walk through my experience testing the 1B, 4B, 12B, and 27B parameter models across logic puzzles, image recognition, and code generation tasks.

In this detailed breakdown, I’ll show you exactly how each model performs on various tasks and help you decide which one might be right for your needs.

🎥 Watch the Video

Key Things to Know About Gemma 3 ✨

- 🌟 It’s probably the best model to run on local hardware in 2025 (as of writing this post)

- 📏 Available in four sizes: 1B, 4B, 12B, and 27B parameters

- 💥 Packs a big punch for its size, competing with models requiring much more hardware

- 🌐 Has great multi-linguistic capabilities

- 🧠 Offers a massive 128,000 token context window

- 🖼️ Three models (4B, 12B, 27B) are multimodal - they can understand images

- ✍️ The 1B parameter model is text-only

Installing and Running Gemma 3 with Ollama

Installing Gemma 3 is straightforward if you already have Ollama set up:

# Check if Ollama is running

ollama serve

# List available models

ollama list

# Pull the models you want to use

ollama pull gemma3:1b

ollama pull gemma3:4b

ollama pull gemma3:12b

ollama pull gemma3:27b

Here’s what the models look like in terms of storage size:

- 1B model: 800 MB

- 4B model: 3.3 GB

- 12B model: 8.1 GB

- 27B model: 17 GB

This is an important consideration for memory requirements - a good rule of thumb is to have at least as much RAM as the model size for efficient performance.

🧠 Logic Trap Comparison

I put all models through a series of logic traps that LLMs typically struggle with. Here’s how they performed:

Negation in Multi-choice Questions

Correct answer is: A

The following are multiple choice questions (with answers) about common sense.

Question: If a cat has a body temp that is below average, it isn't in

A. danger

B. safe ranges

Answer:

- 1B: Failed ❌ (answered “safe ranges”)

- 4B: Correct! ✅ (answered “danger”)

- 12B: Failed ❌ (answered “safe ranges”)

- 27B: Failed ❌ (answered “safe ranges”)

Linguistic a’s

Write a sentence where every word starts with the letter A

- 1B: Succeeded ✅

- 4B: Succeeded ✅

- 12B: Succeeded ✅

- 27B: Succeeded ✅

Spatial London

Correct answer is: Right

I'm in London and facing west, is Edinburgh to my left or my right?

- 1B: Failed ❌ (answered “left”)

- 4B: Correct! ✅ (answered “right”)

- 12B: Failed ❌ (answered “left”)

- 27B: Failed ❌ (answered “left”)

Counting Letters

Correct answer is: 4

Count the number of occurrences of the letter 'L' in the word 'LOLLAPALOOZA'.

- 1B: Failed ❌

- 4B: Eventually correct but got stuck in an infinite loop ⚠️

- 12B: Correct ✅

- 27B: Correct ✅

Sig Figs

Options: (A, B); Correct option: A

Please round 864 to 3 significant digits.

A. 864

B. 864.000

Answer:

- 1B: Failed ❌ (gave an incorrect answer)

- 4B: Failed ❌ (gave an incorrect answer)

- 12B: Correct! ✅ (recognized it’s already at three significant digits)

- 27B: Correct! ✅ (recognized it’s already at three significant digits)

Repetitive Algebra

Options: (35, 39); Correct option: 39

Please answer the following simple algebra questions.

Q: Suppose 73 = a + 34. What is the value of a? A: 39

Q: Suppose -38 = a + -77. What is the value of a? A: 39

Q: Suppose 75 = a + 36. What is the value of a? A: 39

Q: Suppose 4 = a + -35. What is the value of a? A: 39

Q: Suppose -16 = a + -55. What is the value of a? A: 39

Q: Suppose 121 = a + 82. What is the value of a? A: 39

Q: Suppose 69 = a + 30. What is the value of a? A: 39

Q: Suppose 104 = a + 65. What is the value of a? A: 39

Q: Suppose -11 = a + -50. What is the value of a? A: 39

Q: Suppose 5 = c + -30. What is the value of c? A: 35

Q: Suppose -11 = c + -50. What is the value of c? A:

- 1B: Failed ❌ (answered “35”)

- 4B: Correct! ✅ (answered “39”)

- 12B: Correct! ✅ (answered “39”)

- 27B: Correct! ✅ (answered “39”)

What’s fascinating is that the 4B model sometimes outperformed the larger models on logical reasoning tasks, showing that bigger isn’t always better for these types of challenges.

What has your experience been with Gemma 3 models?

I’m particularly interested in what people think of the 4B model—as it seems to be a sweet spot right now in terms of size and performance.

Let me know in my Discord server or drop a comment on my video!

👁️ Visual Recognition Comparison

The visual recognition capabilities varied between models as expected. The 4B model was surprisingly good and very fast.

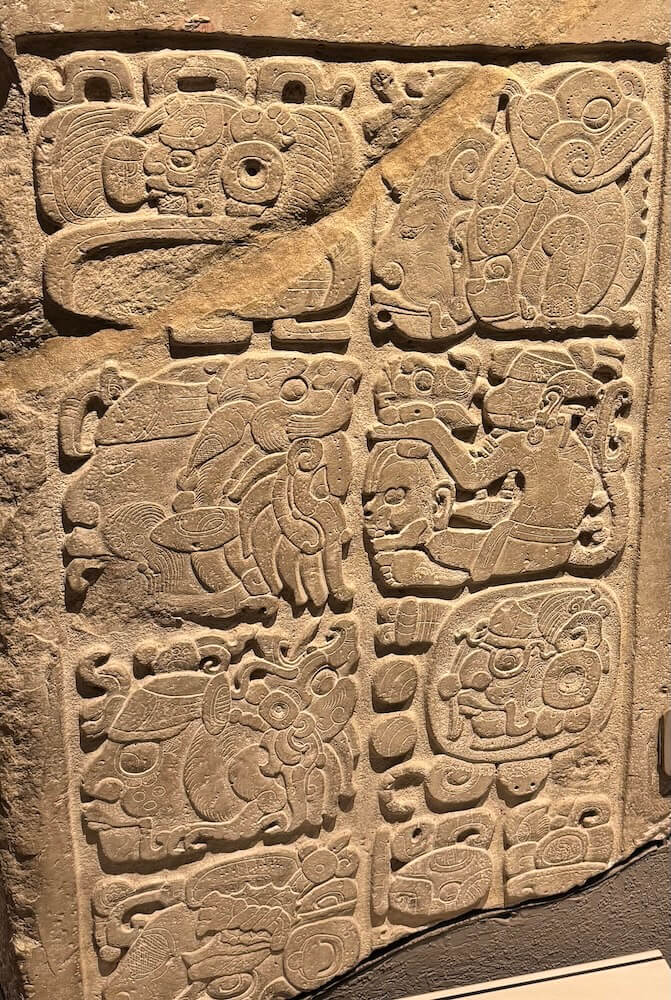

Mayan Glyphs (no context)

Prompt:

translate this ./m.jpg

Correct answer: Identification of Mayan glyphs

- 1B: No visual capability ❌

- 4B: Misidentified as “Lindisfarne Gospels” ❌

- 27B: Also misidentified (with a more detailed—but still incorrect—explanation) ❌

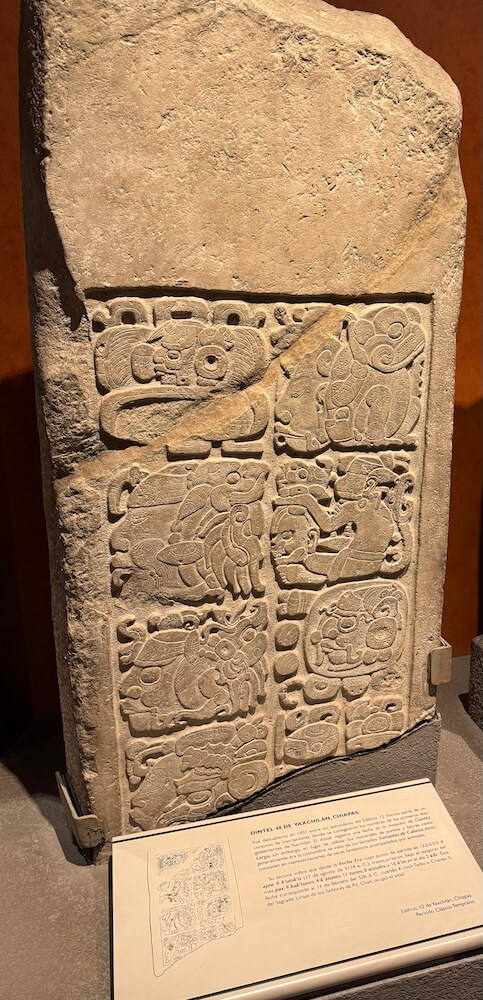

Mayan Glyphs (with Spanish context)

Prompt:

translate this ./mm.jpg

Correct answer: Identification of Mayan writing

- 4B: Correct! ✅

- 27B: Correct! ✅

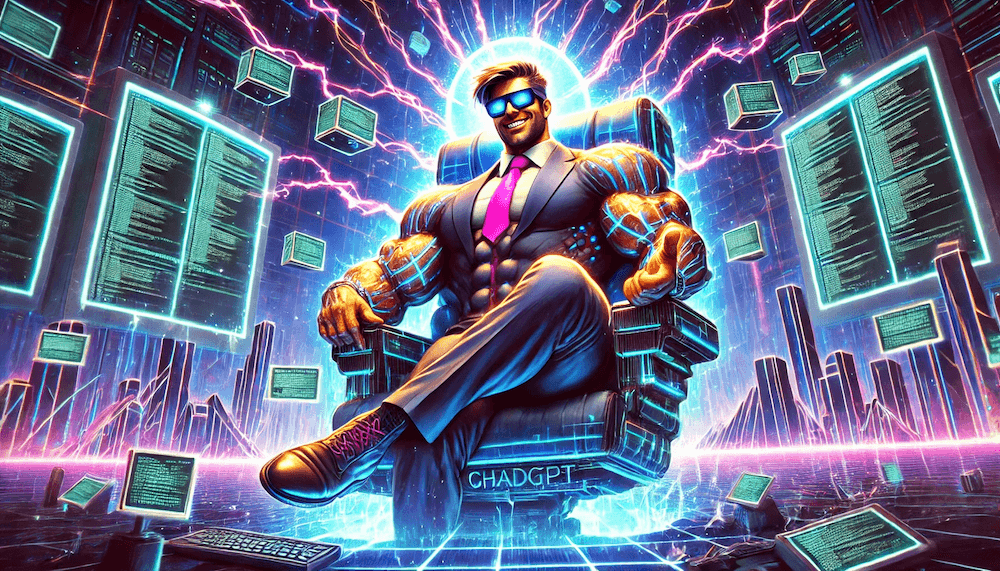

Fictional Characters

Prompt:

is this a real person? ./z.png

Correct answer: No — “Chad GPT” is not a real person

- 1B: Failed ❌ (hallucinated an analysis without visual input)

- 4B: Correct! ✅

Dog at Store

Prompt:

what does he want ./g.png

Correct answer: He wants to go into the store to get a treat.

- 4B: Identified the scene but a bit confused about it ❌ / ✅

- 27B: Offered more nuance but still not exactly getting it ❌ / ✅

Mexico City Neighborhood

Prompt:

where is this? ./x.png

Correct answer: Roma Norte, Mexico City

- 4B: Close — called it “San Rafael” ❌

- 27B: Correct! ✅

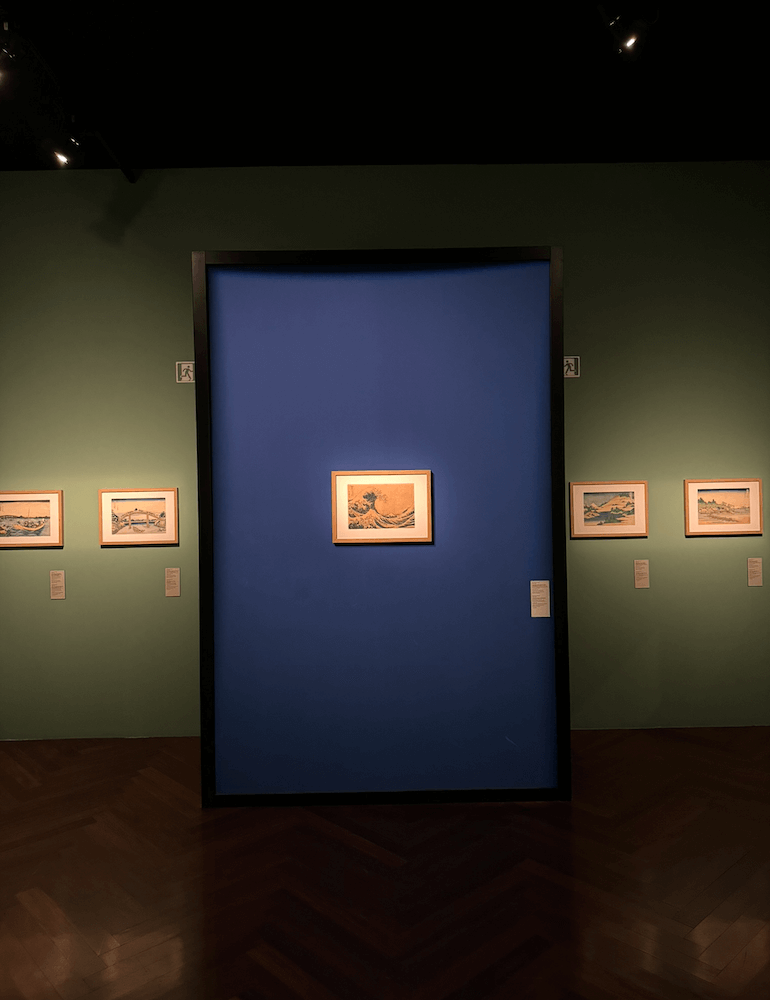

Japanese Woodblock Prints

Prompt:

list the names and dates of these works from left to right ./j.png

Correct answer: Various works from Hokusai’s Thirty-six Views of Mount Fuji

- 4B: Got the main work but Struggled to identify others correctly ❌ / ✅

- 12B: Same problem as 4B ❌ / ✅

- 27B: Most detailed but was still wrong about 4/5 works ❌ / ✅

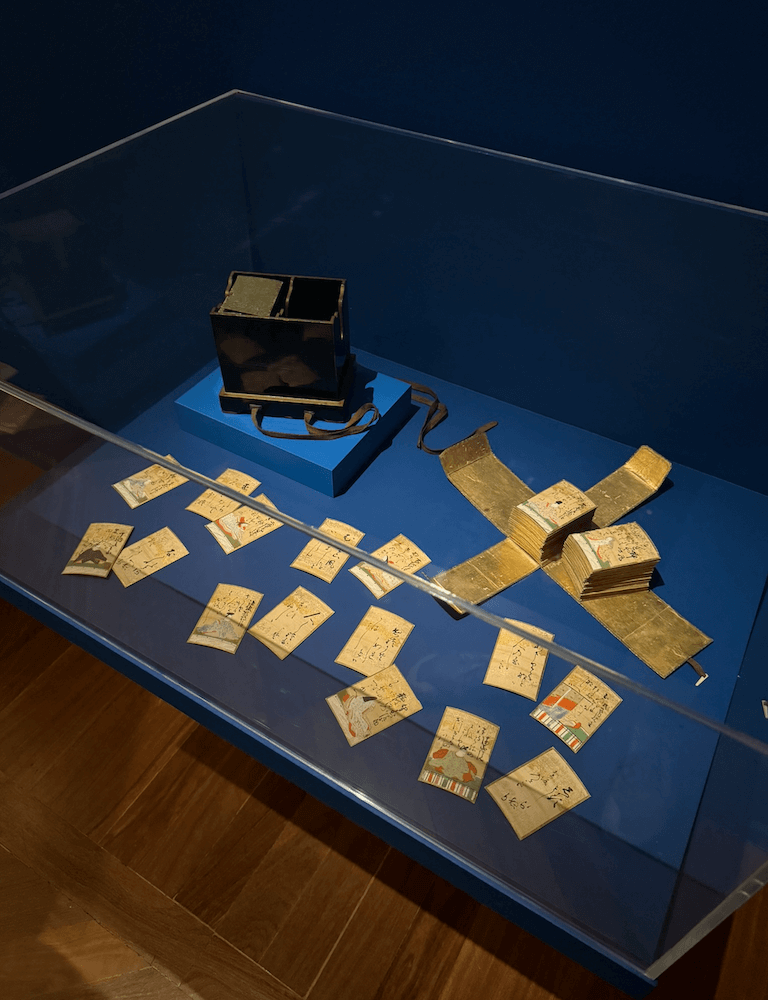

Japanese Playing Cards

Prompt:

what are these? ./p.jpg

Correct answer: Japanese playing cards (karuta/hanafuda)

- 4B: Misidentified as “Japanese paper money” ❌

- 27B: Misidentified as “Egyptian Book of the Dead” ❌

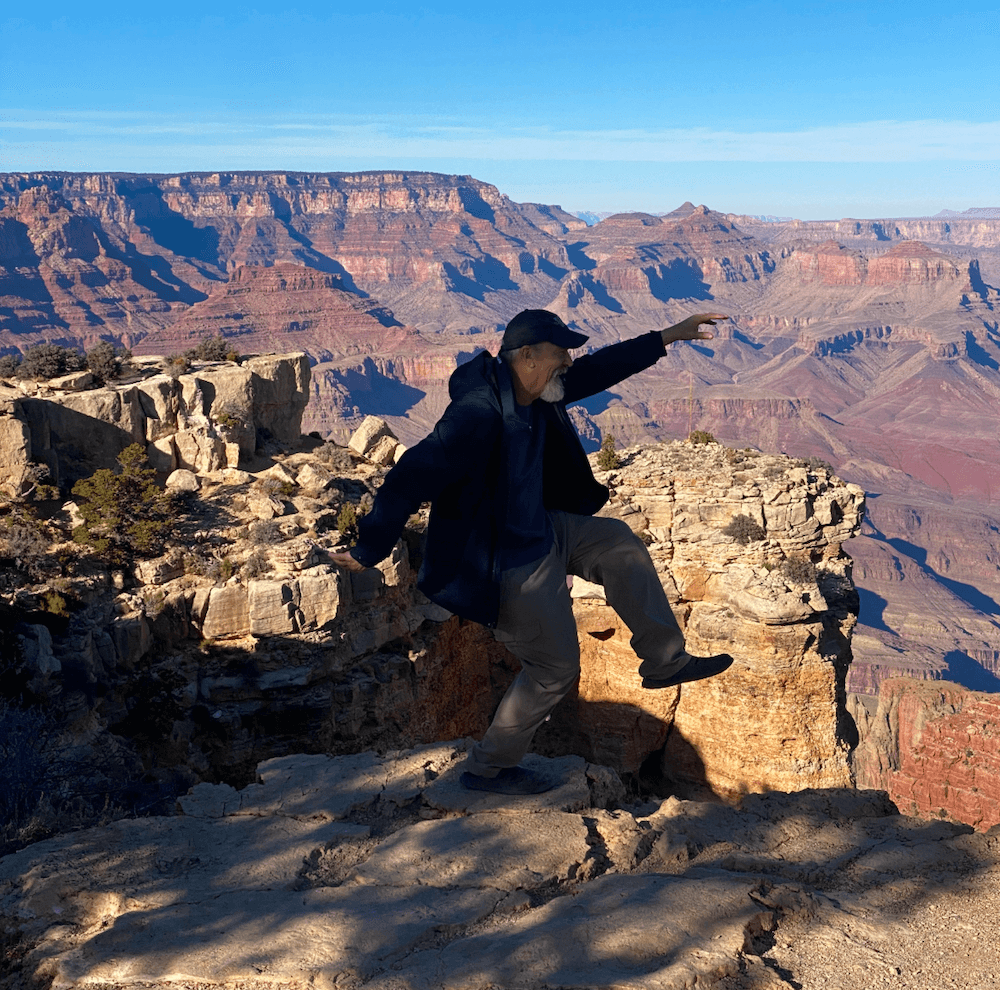

Grand Canyon Photo

Prompt:

is this man safe? ./c.png

Correct answer: It’s a forced‑perspective joke — he’s not actually in danger.

- 4B: Noted potential danger but missed the humor ❌ / ✅

- 27B: More cautious in assessment yet still missed the joke ❌ / ✅

I found it particularly interesting that even the most capable model (27B) struggled with visual humor recognition and certain types of historical imagery.

💻 Code Generation Comparison

For the code generation test, I asked each model to create a rotating quotes carousel with a dark theme:

🚀 1B Model

- 🔥 Blazing fast (barely noticeable on system resources)

- 📝 Created functional but very basic HTML

- ⚙️ Didn’t properly integrate with Cline (didn’t automatically create the file)

- Result: Functional but visually rough

⚡ 4B Model

- 🔥 Significantly more system load but still reasonably fast

- 🎨 Created a slightly improved design

- ⚙️ Also failed to properly integrate with Cline

- Result: Basic but functional carousel

🐢 12B Model

- 🕒 Much slower processing time

- 🎨 Created the most visually appealing design of the group

- ❌ Failed to populate with actual quotes

- Result: Great design but incomplete functionality

🐘 27B Model

- 🥱 Painfully slow (I literally made an entire breakfast while waiting)

- 🎉 Only model to properly integrate with Cline (automatically created the file)

- ✅ Created a complete carousel with quotes

- Result: Most complete solution but with some visual quirks

Conclusion

Memory Usage and Performance

One of the most impressive aspects was how efficiently these models run. On my system with 48GB of RAM, I was able to run all models in parallel:

- 1B model: Barely registered on system resources

- 4B model: Modest resource usage

- 12B model: Significant resource usage but manageable

- 27B model: Heavy resource usage (my fans were going full blast)

At peak, with all models running, my system was utilizing about 42GB of RAM and 100% GPU usage — meaning it was efficient without needing to use swap memory.

Key Takeaways

-

Simple logic challenges trip up even the largest models - size doesn’t always correlate with logical reasoning ability.

-

The 4B model might be the current sweet spot - it offers a good balance of capabilities and performance without excessive resource requirements.

-

Visual recognition varies dramatically - but contextual clues help all models perform better.

-

Code generation quality scales with model size - but so does generation time.

-

Beware of hallucinations in the 1B model - particularly when asking about images (which it can’t actually see).

If you’re running on consumer hardware in 2025, Gemma 3 offers impressive capabilities across the board.

For most users, the 4B model likely offers the best balance of performance and capabilities, while the 27B model is worth the wait for tasks requiring more sophisticated reasoning or generation.

Source Code

If you want to try these tests yourself, you can find all the code and prompts in the GitHub repository.

📌 My newsletter is for AI Engineers. I only send one email a week when I upload a new video.

As a bonus: I’ll send you my Zen Guide for Developers (PDF) and access to my 2nd Brain Notes (Notion URL) when you sign up.

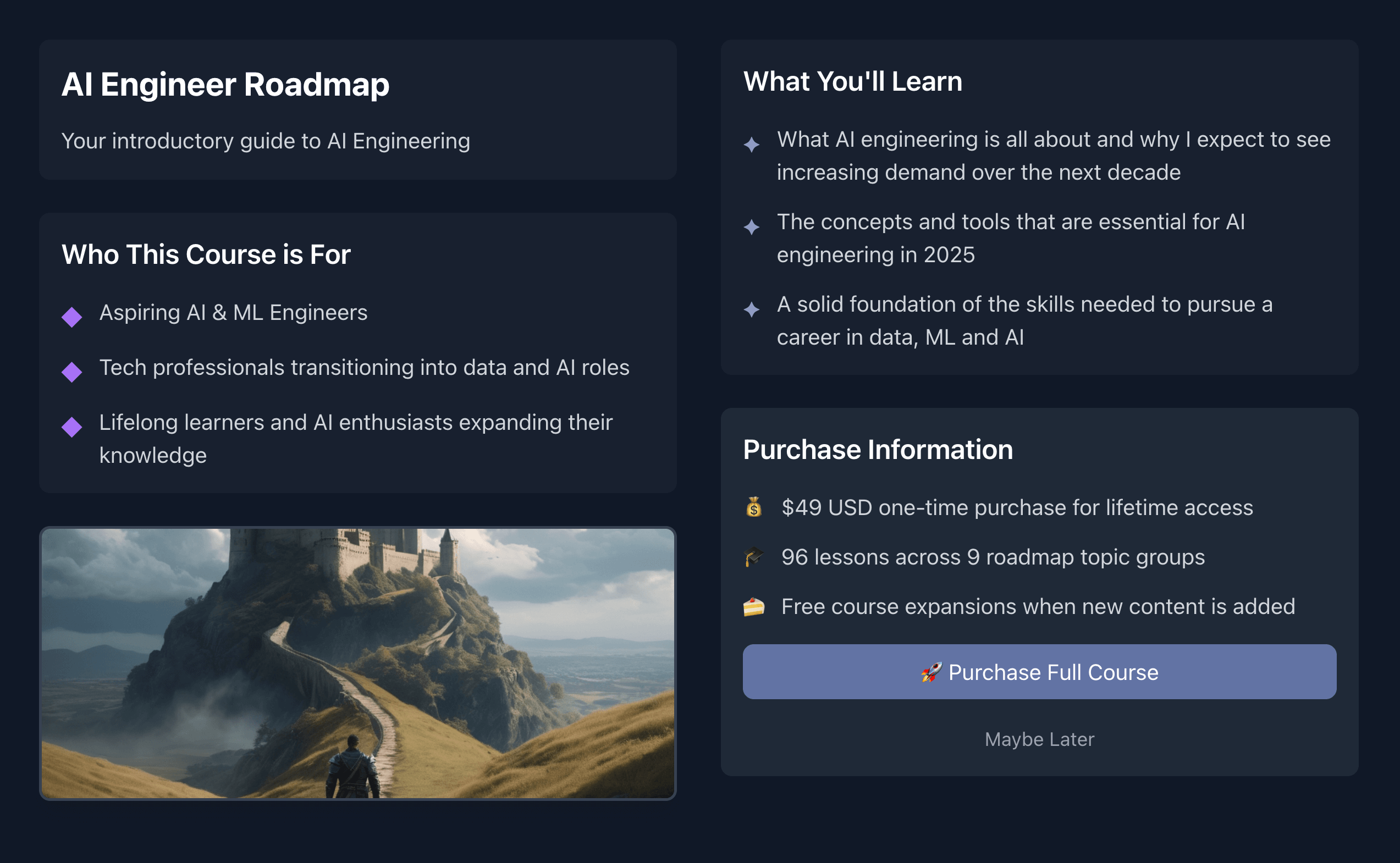

🚀 Level-up in 2025

If you’re serious about AI-powered development, then you’ll enjoy my AI Engineering course which is live on ZazenCodes.com.

It covers:

- AI engineering fundamentals

- LLM deployment strategies

- Machine learning essentials

Thanks for reading and happy coding!